Virtualization and Cloud Provider Comparison


This post is a slightly amended version of the presentation that we gave at TechCon 2017. This post intends to provide some general information about virtualization and the technologies involved, to give a comparison of the main software options, and to finish up with cloud providers.

The topic of "virtualization" is one that we at Cinegy have been asked about with increasing frequency, and the conversation generally starts with something along the lines of "We want to run your software in the cloud".

Usually, when we ask some more questions, this turns into wanting to run the Cinegy software as a part of a virtualization stack within a locally managed datacenter. For this reason, the bulk of the information in this post will be focused on more traditional virtualization offerings.

Virtualization is not new to the IT industry, even though it is still often thought of as such; it has been around since the late 1990s, when VMware was established.

Virtualization Core Terms

We are going to start by covering some of the core terms that are used when talking about virtualization, just to ensure everyone is on the same page.

Host

A host is a physical machine that will provide resources for the system. It does this by having a piece of software running on it that provides this mechanism.

Hypervisor

A hypervisor provides dynamic access mechanisms for virtual machines (VMs) to an underlying physical server and ensures that they each have access to the resources that they have been allocated.

There are two main types of hypervisors:

- Bare-metal or type 1 ‒ runs directly on the host hardware. Examples include VMware’s ESX (ESXi), Microsoft’s Hyper-V, and Citrix’s Xen.
- Hosted or type 2 ‒ runs like any other application on the operating system. These are applications like VMware Workstation, VMware Player, and VirtualBox.

Products such as Linux KVM (Kernel-based Virtual Machine) are a slight anomaly to these groups, as they effectively convert the host’s operating system into a bare-metal hypervisor. However, other applications running on the same OS can still compete for resources, which makes them more akin to the hosted or type 2 model.

Guest

This refers to the virtualized machines running on the host. These are self-contained units that replicate physical machines, each running a supported OS and applications.

Virtualization Technologies

Next, we will cover some of the main technologies that are used with VMs.

Snapshots/Checkpoints

Snapshots, or Checkpoints as Microsoft calls them, are point-in-time captures of the state of a VM. They store information about the machine’s disk, RAM, devices, etc., and allow you to return to that point, discarding all changes made after it. Once the changes are deemed successful, a snapshot can be merged into the running machine, thereby freeing up the storage space it consumed.
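To make the revert and merge behaviour concrete, here is a minimal sketch of a disk with a single snapshot. It is a toy model written for this post, not the on-disk format of any hypervisor: the `ToyDisk` class and its method names are our own invention, and it only covers disk blocks, not RAM or device state.

```python
class ToyDisk:
    """Toy virtual disk with one snapshot level (illustrative only)."""

    def __init__(self):
        self.base = {}     # block number -> data, frozen while a snapshot is open
        self.delta = None  # writes made since the snapshot; None means no snapshot

    def write(self, block, data):
        # With a snapshot open, writes land in the delta and the base stays untouched.
        target = self.base if self.delta is None else self.delta
        target[block] = data

    def read(self, block):
        # Newest data wins: check the delta first, then fall back to the base.
        if self.delta is not None and block in self.delta:
            return self.delta[block]
        return self.base.get(block)

    def snapshot(self):
        if self.delta is not None:
            raise RuntimeError("this toy supports a single snapshot")
        self.delta = {}

    def revert(self):
        # Return to the captured point by discarding everything written since.
        if self.delta is None:
            raise RuntimeError("no snapshot to revert to")
        self.delta = {}

    def merge(self):
        # Accept the changes: fold the delta into the base and free the delta.
        if self.delta is None:
            raise RuntimeError("no snapshot to merge")
        self.base.update(self.delta)
        self.delta = None
```

Reverting throws the delta away, while merging folds it into the base, which is why a merge frees the space the snapshot was consuming.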

Migration

This generally refers to live migration, where a running VM is moved from one physical host to another with no downtime. All the major vendors offer this functionality, although it can come at an additional cost. The term can also refer to moving a VM from one machine to another after first shutting it down. You can also migrate the compute part of a VM separately from its storage part if you wish.

Clone

This is simply a VM that has been copied from another one and is an independent VM with no ties to the original or parent VM. Clones are an exact copy of a VM and therefore contain all the OS settings, etc., that the parent did.

Template

So, finally, we come to templates. Templates differ from clones in their usage ‒ they are static images that cannot be powered on and are therefore more difficult to edit. This allows you to have, for example, a Windows Server template that contains a known good installation of the OS with all the required security updates, management tools, etc. installed. You can use this template to launch clone VMs, which then only need to have applications installed. To keep a template up to date, you convert it back into a VM, install the new patches, and then convert it into a template again.

Hardware-Assisted Virtualization

Hardware-assisted virtualization is a common term for the technologies that have been added to system architectures, such as Intel and AMD processors and system board chipsets. They were introduced to reduce the software overhead of VMs when addressing hardware such as network cards, to support more PCIe devices in the guest OS, and to streamline I/O from the host machines.

In late 2005, Intel released the first two CPUs that supported VT-x, both of which were Pentium 4 models. AMD followed not long after in 2006, when they released their Athlon 64 processors that supported AMD-V. These technologies have been enhanced and extended since then to bring us to where we are now.

Intel VT-x

This technology is now present in almost all of Intel’s current processor lines. Among the enhancements that have been added are the following:

- Extended Page Tables (EPT), added in 2008, are Intel’s second-generation hardware support for Memory Management Unit (MMU) virtualization and gave massive improvements to the performance of MMU-intensive tasks.
- Unrestricted Guest, an Intel term for launching a virtual processor in "real" mode, support for which was added in 2010.
- APIC virtualization (APICv), hardware support for virtualizing the Advanced Programmable Interrupt Controller, became available in late 2013/early 2014 and was brought in to reduce interrupt overhead.

AMD-V

AMD has also increased the capabilities of its own version of the technology:

- Rapid Virtualization Indexing (RVI) was added to the Opteron line of processors in 2008 and is AMD’s version of extended page tables.
- AMD’s version of virtualized APIC wasn’t released until last year, under the name AVIC (Advanced Virtual Interrupt Controller).
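If you want to check whether a machine exposes these extensions, the sketch below shows one way to do it. It assumes a Linux host, where `/proc/cpuinfo` lists CPU feature flags: `vmx` for Intel VT-x, `svm` for AMD-V, `ept` for Extended Page Tables, and `npt` for AMD's nested paging (RVI). The function name is our own, and other operating systems need a different approach.

```python
def virtualization_flags(cpuinfo_text):
    """Report which hardware-virtualization flags appear in /proc/cpuinfo-style text."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        # On x86 Linux each logical CPU has a line such as "flags : fpu vme vmx ..."
        if line.startswith("flags") and ":" in line:
            flags.update(line.split(":", 1)[1].split())
    return {
        "Intel VT-x": "vmx" in flags,
        "AMD-V": "svm" in flags,
        "EPT (Intel)": "ept" in flags,
        "RVI/NPT (AMD)": "npt" in flags,
    }


if __name__ == "__main__":
    # Only meaningful on a Linux host; the path does not exist elsewhere.
    with open("/proc/cpuinfo") as handle:
        for name, present in virtualization_flags(handle.read()).items():
            print(f"{name}: {'yes' if present else 'no'}")
```

Note that a missing flag can also mean the feature is disabled in the BIOS rather than absent from the processor.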

I/O MMU Virtualization

The next big change was I/O memory management unit virtualization, which allows guest machines to directly access peripheral devices like network and graphics cards. This gets referred to as PCI pass-through most of the time.

However, this technology needs to be supported by the processor, system board, BIOS, and the PCI device in order to function.

Intel and AMD’s brand names are Intel VT-d and AMD-Vi, respectively.

Single Root I/O Virtualization (SR-IOV)

Lastly, we have SR-IOV, which allows the hypervisor to basically create a copy of a device’s configuration, and the guest OS can then directly configure and access this copy. This removes the need for the VM manager to get involved and gives large gains in things like network throughput. This is what Amazon refers to as enhanced networking in their EC2 instances.

What this all means is that if you use modern hardware that has been selected to support these technologies, then not only do you get more performance out of your VMs, but you get access to more features in the hypervisor and the options to support more workloads.

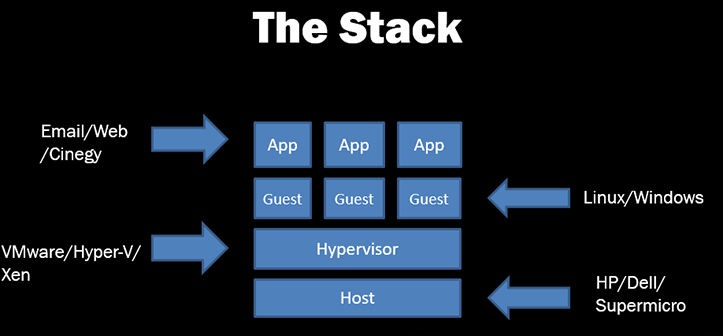

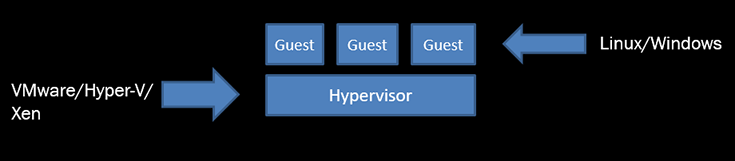

The Stack

We now come to the standard virtualization stack.

At the bottom is the host, which is a physical server box or a blade-type server in a chassis and contains the virtualization technologies discussed above.

Running on this is the hypervisor, such as ESX, Hyper-V, or Xen.

Then we have our various guest operating systems, which can be Windows or Linux, etc.

Finally, we have the applications or services that guests are providing, such as email, web, Cinegy, etc.

What Do I Get

What benefits does virtualization offer you, and why would you deploy these types of environments?

Better Resource Utilization

The first benefit is better resource utilization. You don’t end up with some server CPUs running at only 5% or 10% load and others running at 80% or 90%. By consolidating matching workloads onto the same host, you can average out the CPU usage.

Storage is better utilized as well through thin provisioning of virtual hard disks, which means that only the space required at that point in time is allocated. The use of shared storage not only allows additional capacity to be easily provisioned for a VM, but also means that storage is used as efficiently as possible.

Dynamic Resource Allocation

The ability to react to changes in server load by increasing CPU, memory, or storage allocations, sometimes on the fly or through a short shutdown and power up of a VM, means no lengthy downtimes to install more RAM or hard disks into a server.

Deployment Speed

Deploying additional machines is faster because you can use clones and templates of VMs, and you no longer need to buy a new server and install it in your datacenter. This allows things like testing new software versions or starting new projects to happen quickly.

Better Availability/Better Disaster Recovery

There are various ways of ensuring you have increased availability of your services.

You don’t need to shut down a service if you need to increase the capacity of the underlying host or if you need to deploy a new host.

Clustering the hosts together allows you to use shared resource pools and gives you the ability to allocate a certain quantity of resources to a department, for example. You can usually live-migrate the VMs from one host to another with no downtime.

If you have clustered your hosts together and have the management tools to support it, you can even have the hypervisors automatically move VMs around to ensure that the load on the hosts doesn’t exceed an acceptable level.
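The idea of automatic rebalancing can be sketched in a few lines. This is a deliberately simple greedy toy of our own, not the algorithm that any of the vendors actually uses: the `rebalance` function, the host and VM names, and the load percentages are all hypothetical.

```python
def rebalance(hosts, limit):
    """Greedy toy balancer.

    hosts maps a host name to {vm name: load in % of host CPU}.
    Moves VMs off overloaded hosts and returns the list of moves made.
    """
    def load(host):
        return sum(hosts[host].values())

    moves = []
    while True:
        busiest = max(hosts, key=load)
        if load(busiest) <= limit:
            return moves  # every host is within the acceptable level
        idlest = min(hosts, key=load)
        # VMs on the busiest host that fit on the idlest one without overloading it
        fits = [(vm_load, vm) for vm, vm_load in hosts[busiest].items()
                if load(idlest) + vm_load <= limit]
        if not fits:
            return moves  # nothing can be moved safely; more capacity is needed
        _, vm = max(fits)  # move the largest VM that fits
        hosts[idlest][vm] = hosts[busiest].pop(vm)
        moves.append((vm, busiest, idlest))


# Hypothetical two-host cluster with an 80% acceptable load level.
cluster = {"host-a": {"vm1": 50, "vm2": 40}, "host-b": {"vm3": 10}}
print(rebalance(cluster, limit=80))
```

In a real cluster each of these moves would be a live migration, and the scheduler would weigh memory, storage, and affinity rules as well as CPU load.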

You can have VMs on two different hosts be mirrors of each other so that any changes made to the primary are automatically played onto the mirror, and so if a host were to go down, you can have a fully automated, seamless failover. The mirroring can even be done between datacenters, so you can have your DR site be an up-to-date version of the live servers.

Energy Savings

Another benefit is energy savings in the datacenter: with fewer physical boxes running, you not only draw less power but also reduce your cooling needs.

Staff Productivity

Finally, you have fewer boxes to manage in terms of patching, replacing failed parts, and deploying replacement boxes, etc., which in turn allows staff to focus on other areas.

The Offerings

Now we move on to the various offerings available in the marketplace. We are going to concentrate on the three main vendors in this area: VMware, Microsoft, and Citrix.

As mentioned before, there are others in this space, such as the Linux KVM platform. However, KVM doesn’t tend to get used in the same large-scale deployments as these three and doesn’t come with the same type of features, so we won’t be including it here.

VMware

For a long time, when people talked about virtualization, they were talking about VMware. The company has been in this space for a long time and was viewed as the only real enterprise option.

As a result of that history, VMware has 50 products individually listed on its website. It isn’t possible for us to cover all of these in any meaningful way, so we are going to focus on the products that cover the areas we get the most queries about.

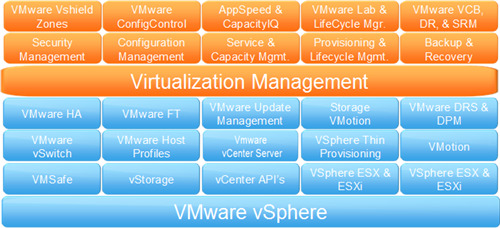

This means that we will be focusing on vSphere and the management platform vCenter. We will also be using version 6.5 of vSphere, released in November 2016, in terms of capabilities and OS compatibilities, etc.

Even just focusing on these two products means that we must consider all the following:

Microsoft

Microsoft’s offering is reasonably recent in comparison to VMware’s. Hyper-V first became available with their Server 2008 product.

It has been enhanced since then with the release of Server 2008 R2, 2012, 2012 R2, and finally 2016.

You can either add Hyper-V as a role to an installation of Windows Server, as you would with DNS or the domain controller role, or install it as a standalone product simply called Hyper-V Server. The main difference is that Hyper-V Server has no GUI, the same as Server Core mode; all you get is the hypervisor, the driver model, and the virtualization components.

You can also run Hyper-V on Nano Server, which was introduced with Server 2016, but this brings a lot more changes, as it is a very minimal-footprint version of the OS. For example, you get no local login capability and can only use 64-bit versions of tools, but the installation size is under 1GB.

Citrix

Citrix acquired XenSource, the company behind Xen, in October 2007 for about 500 million dollars.

Xen originally started in the late 1990s at Cambridge University, and the first public release was made in 2003.

The latest version, XenServer 7, was released in May 2016 and brought better Windows support along with some other enhancements.

Xen is used by companies such as Amazon to run their EC2 platform and Rackspace for their Rackspace Cloud offering.

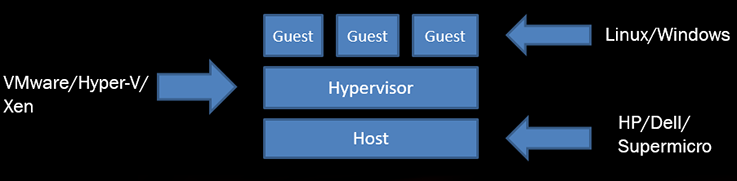

Return to the Stack

If we use our stack model from earlier, then we can compare the offerings at each of these levels to give us some idea of the differences between them.

At the bottom, we had the host or physical box, so we will start by comparing the three offerings at this level.

We will start with Microsoft, as they have the most general of requirements.

Hyper-V Host

The main one is that the processor(s) in the server are 64-bit and support second-level address translation (SLAT). This would be extended page tables for Intel CPUs and rapid virtualization indexing on AMD processors. Without this support, you are not able to install either the Hyper-V server or the Hyper-V role.

After this, it is down to the requirements of the VMs you intend to deploy into your environment.

So, how many and what type of CPUs do you need?

- Intel E3, E5, E7

Do you want more cores at a lower speed?

- 4, 6, 8, 10, 12, 14, 16, 18, 20, 22, 24 cores

How much memory do you need and what speed?

- 256GB, 512GB, 2TB, 4TB

What I/O bandwidth do you need for the hosts?

- 1Gb, 10Gb, 12Gb, 40Gb

Will you use converged infrastructure to minimize connections to the hosts?
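One way to turn these questions into numbers is a rough capacity estimate like the sketch below. The function, the overcommit ratio, and the amount of RAM reserved for the hypervisor are our own assumptions for illustration, not vendor guidance, so substitute figures that suit your workloads.

```python
def vms_per_host(host_cores, host_ram_gb, vm_vcpus, vm_ram_gb,
                 vcpus_per_core=1.0, reserved_ram_gb=8):
    """Estimate how many identical VMs fit on one host.

    vcpus_per_core is the CPU overcommit ratio (1.0 means no overcommit).
    reserved_ram_gb is memory held back for the hypervisor itself (assumed value).
    """
    by_cpu = int(host_cores * vcpus_per_core // vm_vcpus)
    by_ram = int((host_ram_gb - reserved_ram_gb) // vm_ram_gb)
    # The scarcer resource sets the limit; never report a negative count.
    return max(0, min(by_cpu, by_ram))


# Hypothetical host: 24 cores and 256GB of RAM.
print(vms_per_host(24, 256, vm_vcpus=4, vm_ram_gb=32))   # CPU is the limit here
print(vms_per_host(24, 256, vm_vcpus=2, vm_ram_gb=64))   # RAM is the limit here
```

Running the estimate for a few VM profiles shows quickly whether you should be buying more cores or more memory.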

vSphere Host

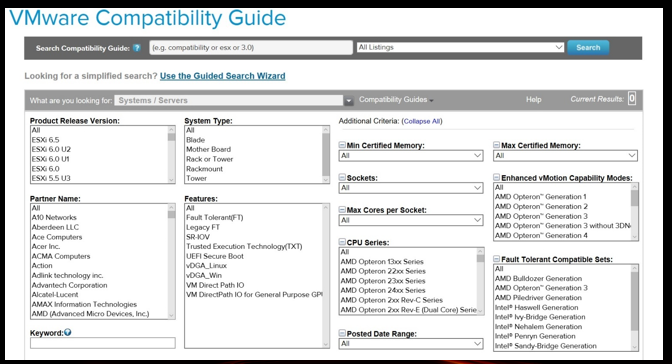

VMware ESX is tested for compatibility with currently shipping platforms from the major server manufacturers in the pre-release testing phase, and this means that they have many products that they support.

To enable people to find a supported platform that suits their needs, VMware provides a compatibility lookup guide on their website, or if you like, you can look it up in their 736-page PDF.

For Intel and AMD processors, VMware supports the processor series and the processor model that are listed with each server.

In addition to this, there is a list of community and individual vendor submissions that have been reported to work with VMware.

There are also partner-verified and supported products (PVSP) for items that cannot be verified through any existing VMware method; for these, the vendor provides the certification and the support for any technical issues.

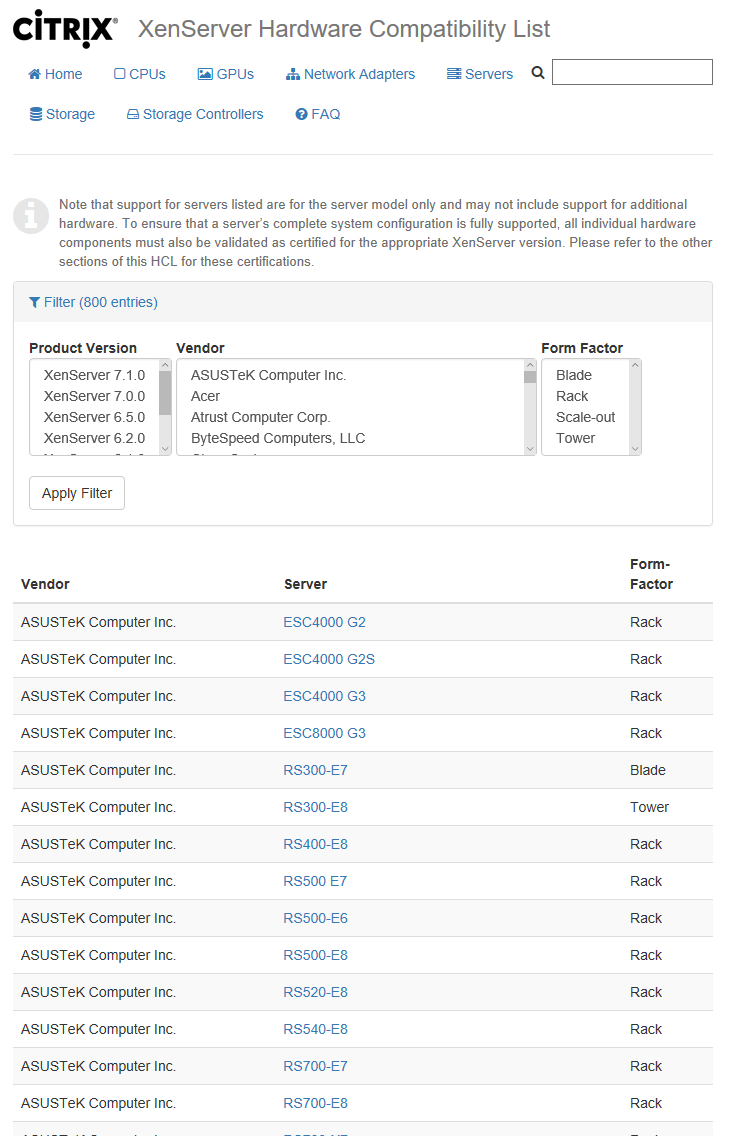

XenServer Host

Citrix offers a similar guide to VMware on their hardware compatibility website, which allows you to filter for results based on what you need to check support for: CPUs, GPUs, Servers, Storage, etc.

For example, there are 800 entries on the server list before any additional filters are applied.

In addition to this, there is a community-verified website listing configurations that work with Citrix, and if you are a Citrix partner, you can use their Citrix-ready verification platform to validate your hardware against XenServer.

There is even a Citrix-Ready Marketplace that allows you to browse for peripherals, servers, and software that have been tested with Citrix.

Host Choice

Choosing hardware that you wish to use for your virtualization platform should be reasonably straightforward.

XenServer and vSphere both publish compatibility guides with plenty of information to help you ensure that the hardware will be suitable and compatible.

For Microsoft, you need to make sure you have the correct hardware capabilities in the CPU, system board, and peripherals to install the product and use the features you want.

VMware and Citrix have specific community resources for additional compatibility information, which can be a great help.

Citrix offers a self-certification scheme for hardware vendors, so you could ask them to go through this process for you.

VMware also has partner-verified and supported products, which, although the list doesn’t contain many items, could also be helpful.

Citrix also has its Citrix-Ready Marketplace covering a wide range of both hardware and software.

The Stack Again

Returning to our stack picture, we now move up to the next level, which is the hypervisor.

Now this is either a straight installation, such as vSphere, XenServer, or Hyper-V Server, or the addition of the Hyper-V role to an installation of Windows Server 2016.

Hyper-V Hypervisor

We are going to list out some of the available features of the hypervisor. You will get the best availability of features with generation 2 VMs running the latest versions of the guest operating system. Also, some features rely on hardware capabilities or associated infrastructure.

- Checkpoints – any supported guest OS.
- Replication – any supported guest OS.
- Hot Add/Removal of Memory – Windows Server 2016 and Windows 10.
- Live Migration – any supported guest OS.
- SR-IOV – 64-bit Windows guests from Windows Server 2012 and Windows 8 upwards.
- Discrete Device Assignment – Windows Server 2016, Windows 10, and Windows Server 2012 R2 with an update.

vSphere Hypervisor

vSphere is VMware’s product, which uses its ESX hypervisor.

This comes in three different versions that offer access to different features and different maximum amounts. These are Standard, Enterprise Plus, and Operations Management Enterprise Plus.

We will list some of the features again, and highlight what the differences may be with the different versions of vSphere.

Some of the features are:

- vMotion – live VM migration. Enterprise Plus and Ops Management Enterprise Plus give you long-distance options, such as between different datacenters.
- vSphere Replication – as the name suggests, this is VM data replication over LAN or WAN.
- Distributed Resource Scheduler (DRS) – automatic load balancing across hosts. Only in Enterprise Plus and Ops Management Enterprise Plus.
- SR-IOV – only in Enterprise Plus and Ops Management Enterprise Plus.
- NVIDIA GRID – allows the use of a GPU in VMs directly. Only in Enterprise Plus and Ops Management Enterprise Plus.

Xen Hypervisor

The Xen hypervisor comes in two versions: standard or enterprise.

- Dynamic Memory Control (DMC) – automatically adjusts the amount of memory available for use by a guest VM’s operating system. By specifying minimum and maximum memory values, a greater density of VMs per host server is permitted.
- Heterogeneous Resource Pools – allows new hosts and CPUs of different models to be added to the existing ones in the pool while still supporting all the VM-level features.
- XenMotion – live migration of the compute part of VMs between hosts in a resource pool.
- Storage XenMotion – live migration of a VM’s storage without touching the compute part, to allow storage resources to be reallocated.
- GPU Passthrough – allows a VM to use a GPU on a 1-to-1 basis.

One Last Time to the Stack

Returning to our stack picture one last time, we now move up to the guest level.

Guest OS Support

Obviously, Hyper-V supports a lot of Windows versions. vSphere and XenServer also have similar levels of support.

Hyper-V also now supports a wide variety of Linux distributions. To make the most of the features, it is best to use the Linux Integration Services or FreeBSD Integration Services driver collections. In newer releases, these drivers are integrated into the kernel and are kept updated.

vSphere and XenServer support for Linux is better than that of Hyper-V, although there are only some minor differences in the list of supported distributions.

We are not going to list out all the Linux versions supported, but you can run distributions from:

- CentOS
- Red Hat
- Debian
- Ubuntu
- SUSE
- FreeBSD

Again, the newest versions of these OSs allow the use of the largest number of features from the hypervisor.

Hypervisor Choice

There are four key factors affecting your possible choice of hypervisor to use:

- Your current environment – whether you are already a Microsoft house with AD, a Windows Update system, etc. in place, or you are using a predominantly Linux-based environment.
- The features you need from the system, some of which we have covered.
- The licensing costs, which we will go over next, as the method of licensing varies between the products.
- The OSs you will be running in the VMs.

We have looked at the features and what guest OSs are available for the hypervisors. Now let’s look at some number comparisons.

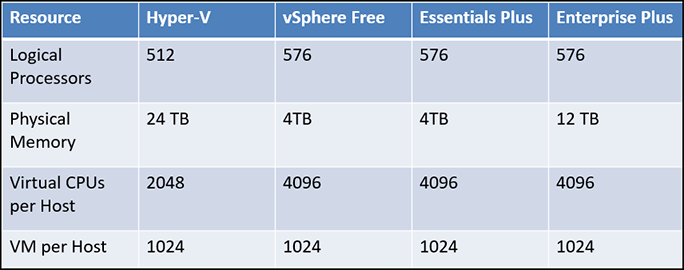

Some Host Numbers

Here we have some of the numbers for the maximums supported on hosts for some of the hypervisor versions. We have included 3 versions for vSphere as they represent different price points for deployment.

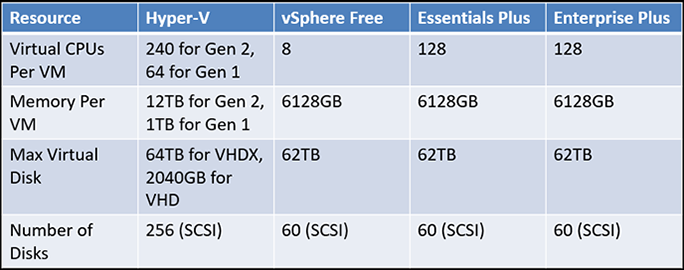

Some Guest Numbers

Next, we have some of the maximums that are possible for guests running on the hypervisors.

Weigh It Up

So, we have covered the capabilities of the hypervisors and some of the maximums that are possible with them. The last comparison method that we can use is the cost.

- Hyper-V licensing costs:
  - $6,155 for Datacenter for 16-core pack
    - Unlimited guest VMs
  - $882 for Standard for 16-core pack
    - Only 2 guest VMs
- System Center 2016
  - $3,607 for Datacenter for 16-core pack

Total cost for Hyper-V Datacenter with management is $9,762 for a 2 processor – 16-core model, which would allow you to run as many VMs as the box would support along with management of them.

- vSphere Enterprise Plus licensing costs
  - $6,085 per processor with basic support
  - $6,287 with production support
- vCenter Server Standard cost – $6,085
  - Production support cost – $1,625

The total cost for the Enterprise Plus version of vSphere, which gives us the graphics pass-through feature we want, on a 1 CPU server with 24x7 support and a management server to go along with it is $13,997.

For a 2 CPU server, we add roughly another $6,000, which gives a total of about $20,000, around double the Hyper-V cost.

Enterprise Plus is the most expensive version of vSphere available, but it is the only version that provides the graphics pass-through capability.
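The totals quoted above can be reproduced with a few lines of arithmetic. The sketch below uses only the list prices given in this post; the constant and function names are our own, and we are assuming that the $1,625 production support figure applies to vCenter Server, since that is the combination that adds up to the stated $13,997.

```python
# List prices in US dollars, as quoted in this post.
HYPERV_DATACENTER_16_CORE = 6155
SYSTEM_CENTER_DATACENTER_16_CORE = 3607
VSPHERE_ENT_PLUS_PER_CPU = 6287       # per processor, with production support
VCENTER_STANDARD = 6085
VCENTER_PRODUCTION_SUPPORT = 1625     # assumed to be the vCenter support line


def hyperv_total():
    """Hyper-V Datacenter plus System Center for one 16-core pack."""
    return HYPERV_DATACENTER_16_CORE + SYSTEM_CENTER_DATACENTER_16_CORE


def vsphere_total(cpus):
    """vSphere Enterprise Plus for the given CPU count plus one vCenter server."""
    return (cpus * VSPHERE_ENT_PLUS_PER_CPU
            + VCENTER_STANDARD
            + VCENTER_PRODUCTION_SUPPORT)


print("Hyper-V, 2 CPUs / 16 cores:", hyperv_total())
print("vSphere, 1 CPU:", vsphere_total(1))
print("vSphere, 2 CPUs:", vsphere_total(2))
print("Ratio at 2 CPUs:", round(vsphere_total(2) / hyperv_total(), 2))
```

Using the exact per-processor price rather than the rounded $6,000 puts the 2 CPU vSphere figure slightly above $20,000, which is still roughly double the Hyper-V total.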

Which One Should I Pick?

It depends! Some of the questions that you need to ask are:

- Are you running a Microsoft or Linux environment now, for example?
- What type of server hardware are you using?
- Would a per-processor or per-core licensing model work best for you?
- Is there one feature that you need from the virtualization platform?

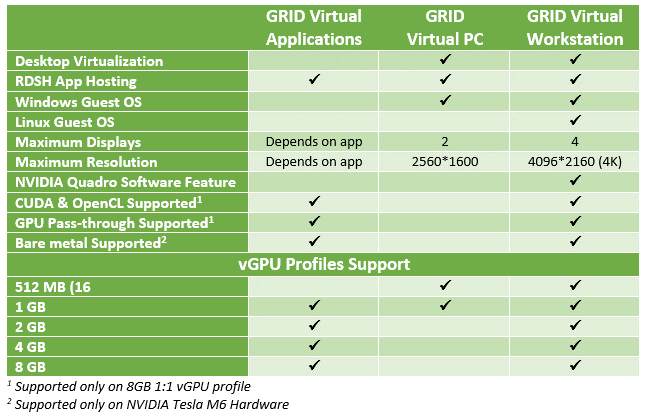

NVIDIA GRID 2

One of the aspects that needs to be considered when running Cinegy in a virtualization environment is that you will want to make use of our H.264 offloading as much as possible to maximize the software capabilities.

NVIDIA brought in some changes when it introduced the GRID 2.0 initiative.

The three software licensing models are virtual applications, virtual PC, and virtual workstation:

- Virtual Applications – a new model introduced with 2.0. It is for companies that want to run things like Citrix XenApp and Microsoft Remote Desktop Session Host (RDSH). "Virtual applications" allow a better user experience for applications running in a Windows environment, for example.
- Virtual PC – for a complete virtual desktop environment.
- Virtual Workstation – for professional graphics usage.

NVIDIA also introduced new licensing costs, which originally caused some consternation in the community because of the amounts. They have since been revised down.

Each of these costs is for a concurrent user license, and you need one license per active session on the GPU.

The annual subscription includes the license plus Support, Updates, and Maintenance (SUMS) for that year.

The perpetual license is just that, a license forever; SUMS is required only for the first year and can then be purchased annually.

For a VM environment, you are going to need a Virtual Workstation license to give GPU pass-through and allow access to CUDA and OpenCL.

Finally, you have the hardware platform:

- The M10 is the user-density-optimized option and has 4 Maxwell GPUs with a total of 32GB of RAM.
- The M60 is the performance-optimized option and has 2 Maxwell GPUs with 16GB of RAM.
- The M6 is the blade-server-compatible option and has 1 Maxwell GPU with 8GB of RAM. It comes in the MXM form factor, whereas the other two are dual-slot PCIe 3.0 cards.

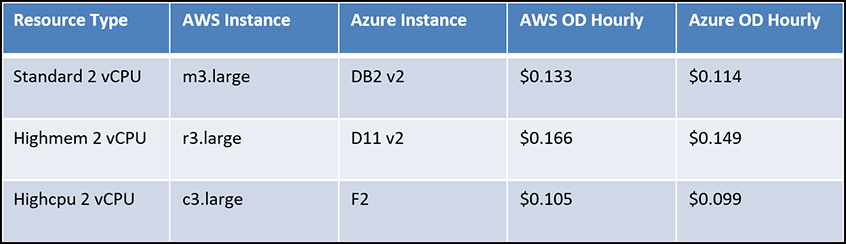

AWS vs Azure

The two main Cloud providers that people tend to use are Amazon and Microsoft. Whilst Google does have its own offering, in our experience, customers don’t tend to consider it.

For an apples-to-apples type comparison, it is best to use the cost of compute services from the public cloud providers. This tends to make up the biggest chunk of spend for people in those environments.

These instances are running Linux in the US-East region with SSD storage. The underlying hardware is:

- M3.large – E5-2670 v2 + 7.5GB of RAM
- R3.large – E5-2670 v2 + 15.25GB of RAM
- C3.large – E5-2680 v2 + 3.75GB of RAM

Here we can see that, using on-demand pricing, Azure comes out cheaper for each of these types.

You can reduce these costs by using Reserved Instances on AWS or, for Azure, a Microsoft Enterprise Agreement if you have one. However, these lock you in to a certain degree.

Changing over to running Windows on these instances attracts a premium from each of the vendors, as you would expect. The increase per year for the two vendors is about $1,100.
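Cloud compute is billed by the hour, so comparing yearly figures means converting between the two. The sketch below shows the conversion, assuming an instance that runs around the clock for a 365-day year. The only figure taken from this post is the roughly $1,100 yearly Windows premium; the hourly rate in the example is hypothetical and is not a quoted AWS or Azure price.

```python
HOURS_PER_YEAR = 24 * 365  # assumes the instance runs 24x7 for a full year


def annual_cost(hourly_rate, hours=HOURS_PER_YEAR):
    """Yearly spend for an instance billed at hourly_rate."""
    return hourly_rate * hours


def hourly_from_annual(annual):
    """Hourly rate that produces the given yearly spend."""
    return annual / HOURS_PER_YEAR


# The Windows premium of about $1,100 a year expressed per hour.
print(f"Windows premium: ${hourly_from_annual(1100):.4f} per hour")

# Hypothetical Linux on-demand rate, for illustration only.
linux_rate = 0.10
print(f"Linux:   ${annual_cost(linux_rate):,.2f} per year")
print(f"Windows: ${annual_cost(linux_rate) + 1100:,.2f} per year")
```

Seen per hour the premium looks small, but for a small instance it can be as large as the Linux price itself, which is worth remembering when you size a Windows-based deployment.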

The other comparison that can be used is the level of services available per region. GPU-based instances, for example, are not available in AWS’s newest regions, such as Mumbai and Canada, and for Azure, the availability is even less, with only some of the USA regions having availability.

Cloud Lessons

What have we learnt from our time putting things into the cloud and from the conversations we have had with customers?

One of the main lessons is the level of drift you can get with the clocks of the running machines.

The second, which has proven to be a challenge, is packet loss. Because cloud providers use software-defined networking, which must be reconfigurable to provide the connectivity required for the various VMs, packet loss should be factored in. This is a problem when you use UDP for your traffic and packets need to arrive in the same order as they were sent. Some numbers demonstrating this are shown below.

Having said that, you should build for failure. It is possible that some packets will get lost, that an underlying host will go down and take your instance with it, or that your instance will develop a fault and become unresponsive.

As we mentioned earlier, you need to check that the service you want to use is available in the areas of the world that you wish to deploy into. The GPU-backed instance is one example of this.

Don’t think that because you can’t do something in the cloud today that you won’t be able to do it in the cloud next month. New services are being added by the providers at quite a high rate, and existing services are being enhanced or added to as well.

Keeping up with all the changes that this technology brings is quite the task.

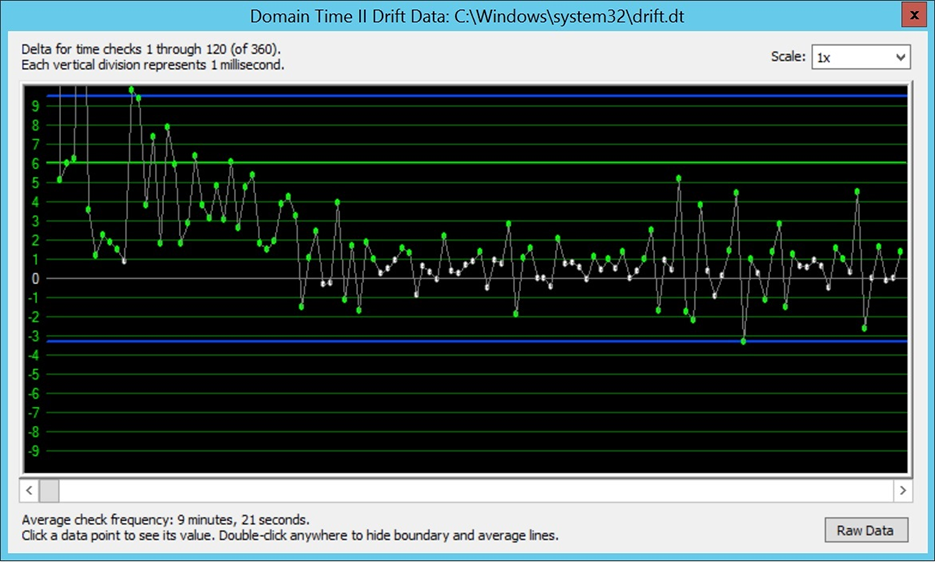

Clock Drift

In this type of environment, you need to either rely on the underlying real-time clock of the hosts or sync your OS clock against another accurate time source.

There are various methods of ensuring the accuracy of the OS clock, and one of these is to use a third-party application that runs on your machine and ensures the accuracy.

This is the drift graph of the Domain Time client running on a standard G2 instance in the Frankfurt region.

It is set to query the various NTP server pools run by Amazon and to achieve a target maximum of 5 ms of difference for this machine.

As you can see, when the software starts, there is a large difference between the system clock and the reference servers, and whilst this is brought down, it still takes quite a few checks before the clock is within the configured limit.

We then move into a period of relative stability in which only minor adjustments are needed, followed by some larger variance in the clock again.

This is something of great importance when you are running playout from the cloud to ensure that the packets leave the machine in a consistent manner. It also allows sending and receiving machines to agree on the packets sent.

The two blue lines are the upper and lower limits of the difference detected, and the green line is the current average offset that the machine clock has to the reference clock.

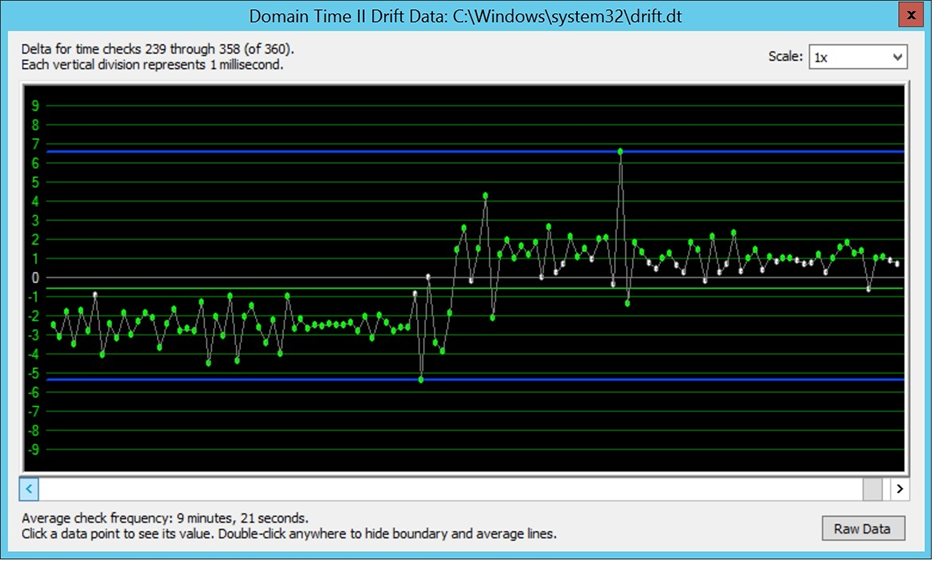

Here we can see the results of the software running for a prolonged period of time. The software provides a feature that compensates for the ongoing clock difference between the machine it is running on and the reference clock:

Now the green line, which is the average time difference being achieved, is much closer to zero. The level of variance is much lower, and the number of large differences is also reduced, but they are still present, so this is something that needs to be monitored.

Therefore, it is best to ensure that the machine has reached a stable clock accuracy before you run it in a production environment.
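A simple check along these lines can be automated. The sketch below summarizes a series of clock-offset samples in the same terms as the graphs (lower limit, upper limit, and average) and decides whether the clock has settled within the 5 ms target. The function names, the sample values, and the number of consecutive good checks required are our own assumptions; this is not how the Domain Time client works internally.

```python
from statistics import mean


def summarize_offsets(offsets_ms, target_ms=5.0):
    """Summarize clock-offset samples (in milliseconds) against a target."""
    within = [abs(offset) <= target_ms for offset in offsets_ms]
    return {
        "lower": min(offsets_ms),
        "upper": max(offsets_ms),
        "average": mean(offsets_ms),
        "fraction_within_target": sum(within) / len(within),
    }


def ready_for_production(offsets_ms, target_ms=5.0, required_checks=10):
    """True once the most recent checks have all stayed inside the target."""
    recent = offsets_ms[-required_checks:]
    return (len(recent) == required_checks
            and all(abs(offset) <= target_ms for offset in recent))


# Hypothetical offsets: large at start-up, then settling towards zero.
samples = [42.0, 17.5, 6.1, 3.0, -2.0, 1.5, -0.5, 0.2]
print(summarize_offsets(samples))
print(ready_for_production(samples, required_checks=5))
```

Requiring a run of consecutive good checks, rather than a single one, guards against declaring the machine ready during a brief lull between larger corrections.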

Packet Loss

Packet loss is another challenge that would need to be considered when operating in a cloud environment.

We have found that the amount of loss you experience does vary between different regions of the provider and could be attributed to both the age of the datacenter installation and the load that the hosts in the datacenter are under.

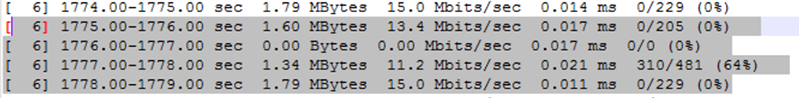

We ran some standard iPerf tests between instances running in the AWS US-EAST-1 region, and the type of loss that we experienced was most pronounced when we were using 8K packet sizes.

Here you can see the way in which the packet loss manifests itself. We get a drop in the throughput, then a complete absence of any traffic, and then an attempt to catch up, which then generates the loss in packets:

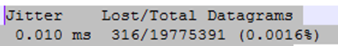

Over this 24-hour test, 316 packets were lost out of a total of 19,775,391 sent. Whilst this may not seem like much, it is the way in which the loss occurred that was problematic, as we had a one-second period where no packets appeared to flow:

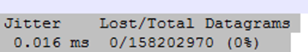
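The figures from the 8K test show why a headline loss rate can be misleading. The sketch below computes the overall loss percentage from the totals above and, separately, looks for the longest period with no traffic in a series of per-second packet counts. The per-second values are hypothetical, chosen to mimic the dip, silence, and catch-up pattern described; they are not our measured data.

```python
def loss_percent(lost, sent):
    """Packet loss as a percentage of packets sent."""
    return 100.0 * lost / sent


def longest_stall(packets_per_second):
    """Longest run, in seconds, of one-second intervals that carried no packets."""
    longest = current = 0
    for count in packets_per_second:
        current = current + 1 if count == 0 else 0
        longest = max(longest, current)
    return longest


# Totals from the 24-hour test with 8K packets.
print(f"{loss_percent(316, 19_775_391):.4f}% of packets lost")

# Hypothetical per-second counts: a dip, a silent second, then a catch-up burst.
samples = [229, 230, 120, 0, 410, 229]
print(longest_stall(samples), "second(s) with no traffic")
```

An overall loss rate this small would usually be considered negligible, so for live video it is the stall metric, not the percentage, that deserves an alert.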

When we reran these tests using a 1K packet size, the loss either disappeared (so no packets were lost when 158,202,970 packets were sent), or the number was the odd one or two here or there, which is fixable using mechanisms such as forward error correction.
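To illustrate how forward error correction can repair the odd lost packet, here is a minimal sketch of the simplest scheme: one XOR parity packet per group of data packets. This is our own illustrative example rather than a description of any particular product's FEC; it assumes equal-length packets and can rebuild only one lost packet per group.

```python
def xor_parity(packets):
    """Build one parity packet as the byte-wise XOR of equal-length packets."""
    parity = bytearray(len(packets[0]))
    for packet in packets:
        for index, byte in enumerate(packet):
            parity[index] ^= byte
    return bytes(parity)


def recover(received, parity):
    """Return the group with a single missing packet (marked None) rebuilt."""
    missing = [index for index, packet in enumerate(received) if packet is None]
    if not missing:
        return list(received)  # nothing was lost
    if len(missing) > 1:
        raise ValueError("XOR parity can repair only one lost packet per group")
    present = [packet for packet in received if packet is not None]
    # XOR of the parity with every surviving packet leaves the missing one.
    rebuilt = xor_parity(present + [parity])
    position = missing[0]
    return received[:position] + [rebuilt] + received[position + 1:]


group = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_parity(group)
print(recover([b"AAAA", None, b"CCCC"], parity) == group)
```

The cost is one extra packet per group, which is why this approach suits isolated losses but does nothing for a one-second outage in which a whole run of packets goes missing.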

That brings us to the end of this rather long post. We hope that some of the information found here is useful and helps you make a more informed decision on whether virtualization or the public cloud, or a hybrid of the two is a good fit for your plans.