Cinegy Air 14 - What’s New

Reading time ~18 minutes

Introduction

Cinegy Air 14 has been released and is ready for evaluation, go-live testing and production use! We’ve added a fantastic number of enhancements since v12, and I am happy to recommend that people start using this version of the product – although, of course, mission-critical workloads should follow suitable deployment plans in case of any problems. I’m proud of the team that has worked so hard on version 14, and I can honestly say this release has again set a new bar in quality and stability for a ‘point zero’ version.

As we did with the last major version, this post helps outline what’s new and why customers should care about this release. It also helps people understand why it’s worth keeping up-to-date with new builds – since not only do we add new features, we also fix defects and make sure that software continues to work on updated versions of Windows. Keeping up-to-date with security patches and releases on the hosting operating system is a critically important task, and we work hard to make sure each fresh release (and point-release!) is tuned to work well on whatever updates have happened since the previous deployment.

Updates are a chore, but this continuous approach to vulnerability mitigation does mean that choosing a software platform like Cinegy for mission-critical business tasks should always be more secure than some proprietary lump that hasn’t taken a firmware update in 2 years!

This release contains a great many internal changes and performance optimizations, further reducing the resource consumption of the Cinegy Playout engine and featuring an end-to-end GPU pipeline that allows content to remain in the GPU from start to finish during composition.

However, this post is about details! Be warned: this list is not exhaustive, and it is not intended to replace the more specific (but drier) release notes. Instead, it lets us cherry-pick and highlight what we think are the key functional changes and enhancements made since the last major release, v12.

And yes, we did skip unlucky version 13…

Major Changes – "TL;DR section"

Below are the headline "major" changes in Cinegy Air 14, which we expect to matter most to customers:

-

8K support – via IP and SDI (e.g. BMD Decklink 8K Pro)

-

SRT-encapsulated IP input and output

-

More performance optimization for Cloud + GPU (up to 50% less CPU)

-

WDM and WebCam input devices as sources

-

Item Type customizable color categories

-

Playout engine as a Windows service

Other Significant Changes

Below are some other changes, called out because of their potential impact on installation or the secondary benefits they might bring, which warrant inclusion in this "must read" section.

-

Audio fading added to auto-fades in items

-

Cinegy Titler scenes now supported as primary items

-

HEVC 10-bit B-frames (with NVIDIA RTX series)

-

SCTE 104 SDI signaling

-

PowerShell as an easy-to-customize Event Manager event.

-

Cinegy Type is now EOL and not officially supported inside Cinegy Air 14

-

NVIDIA RTX and other Turing-generation GPU boards no longer support interlaced H.264 encoding. This applies to all versions of any Cinegy software supporting GPU encoding and is a hardware limitation of the NVIDIA boards. Pascal and earlier boards are not affected.

More Details on the Updates – "The long read section"

If you have read this far, then you are interested in details, preferably with pictures and data points. So let’s dive into these enhancements in more depth.

8K Support

Cinegy Air 14 officially introduces 8K output in a production, shipping version. We’ve been demoing 8K at tradeshows for 4 years now, but it’s been quite a journey to reach the point where we consider it production-ready. That journey involved waiting for equipment to be invented, creating a massively-threaded, optimized codec (Daniel2) from scratch, and re-working the whole playout pipeline so it can run natively either in the CPU or entirely in the GPU.

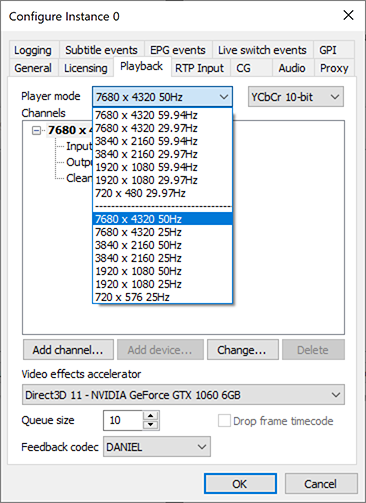

Of course, it’s almost impossible to put meaningful 8K pictures into a post that will be largely viewed online. And enabling it actually involves little fanfare – it’s just a new entry in our output format drop down:

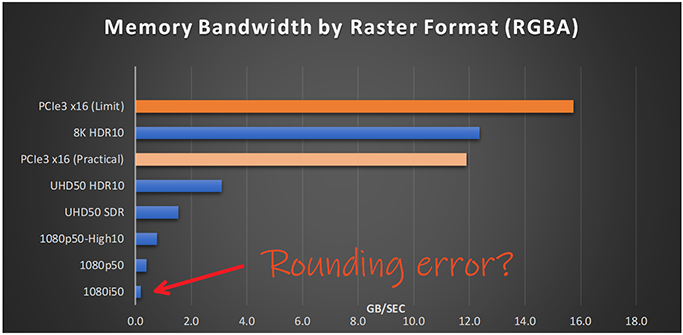

However, the following small chart helps explain the scale of the challenge faced by anyone trying to work in 8K properly: that means at least 50fps and 10-bit HDR color (10-bit also avoids the nasty banding that such high resolutions can exacerbate).

These numbers were calculated assuming RGBA. Although using YUV 4:2:2 can reduce the bandwidth by 50%, the relative difference between the formats remains the same; the key point is that 8K50 10-bit has up to a 64x bandwidth multiplier over what is considered "classic" 1080i50 HD.

We included the theoretical maximum and the practically observed limits of the PCIe 3 standard in the chart for reference, and this shows that compression to YUV 4:2:2 is required for a PCIe card like the Decklink 8K Pro to operate. Since the number of PCIe lanes available in a PC depends on the hardware, care must be taken not to waste capacity copying data needlessly. Newer hardware is increasing the number of lanes available, but anyone looking to work with 8K needs to study both the CPU and motherboard specifications of their machines to avoid disappointment, and make sure cards are installed in slots with the correct lane capacity.
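Since the chart itself is an image, the arithmetic behind the 64x figure can be sketched in a few lines of Python. This is a back-of-envelope model under stated assumptions: 10-bit samples padded into 16-bit words (which is what lets the multiplier reach 64x; packed 10-bit layouts need less), 1080i50 counted as 25 full frames per second, and an x8 PCIe 3.0 slot assumed for the card (check your own card's specification). Real-world PCIe throughput is lower than the theoretical figure computed here.

```python
# Rough uncompressed video bandwidth estimates, assuming:
#  - RGBA = 4 samples per pixel, YUV 4:2:2 = 2 samples per pixel on average
#  - 8-bit samples stored in 1 byte, 10-bit samples padded into 2-byte words
#  - 1080i50 delivers 25 full frames per second (50 fields)
#  - PCIe 3.0: 8 GT/s per lane with 128b/130b encoding (theoretical maximum)

SAMPLES_PER_PIXEL = {"RGBA": 4, "YUV422": 2}


def bytes_per_sample(bit_depth: int) -> int:
    # Round up to whole bytes: 8-bit -> 1 byte, 10-bit -> 2 bytes
    return (bit_depth + 7) // 8


def video_gbps(width, height, frames_per_sec, bit_depth, fmt="RGBA"):
    """Uncompressed video payload in gigabits per second."""
    bytes_per_frame = width * height * SAMPLES_PER_PIXEL[fmt] * bytes_per_sample(bit_depth)
    return bytes_per_frame * frames_per_sec * 8 / 1e9


def pcie3_gbps(lanes: int) -> float:
    """Theoretical PCIe 3.0 throughput per direction, in Gbit/s."""
    return 8.0 * lanes * 128 / 130


hd = video_gbps(1920, 1080, 25, 8)                      # classic 1080i50, 8-bit RGBA
uhd8k = video_gbps(7680, 4320, 50, 10)                  # 8K50, 10-bit RGBA
uhd8k_422 = video_gbps(7680, 4320, 50, 10, "YUV422")    # 8K50, 10-bit 4:2:2

print(f"1080i50 8-bit RGBA:  {hd:7.2f} Gbit/s")
print(f"8K50 10-bit RGBA:    {uhd8k:7.2f} Gbit/s ({uhd8k / hd:.0f}x HD)")
print(f"8K50 10-bit 4:2:2:   {uhd8k_422:7.2f} Gbit/s")
print(f"PCIe 3.0 x8 maximum: {pcie3_gbps(8):7.2f} Gbit/s")
```

Under these assumptions, 8K50 10-bit RGBA comes out at roughly 106 Gbit/s, 64 times the HD figure. Moving to 4:2:2 halves that to about 53 Gbit/s, which sits under the roughly 63 Gbit/s theoretical ceiling of the assumed x8 slot, while RGBA would not fit at all.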

For our part, Cinegy Air 14 now includes the ability to run the whole composition pipeline natively inside a GPU. Coupled with the Daniel2 codec, we can transfer frames compressed at a 10:1 ratio into that GPU for compositing without straining the bus, which is critical if the 8K application needs its I/O over multiple 12G-SDI links.

Having established that 8K is not a trivial matter, a reasonable question is: what does it take to run it? While this field is still young, and exciting new single-CPU products are shipping literally as this post is being written (like the new 3rd generation AMD Ryzen chips with 12 cores and PCIe 4.0), we do have 8K in production with a customer, so I can share some hardware details from the field.

Format: 8K (7680x4320) at 59.94fps

Codec: Cinegy Daniel2

Machine: HP Z640

OS: Windows Server 2012 R2 Standard

CPU: Dual Intel Xeon E5-2697 v4 @ 2.30GHz, 18 cores each

RAM: 64 GB (DDR4 2400MHz)

Graphics: NVIDIA Quadro P4000 (8GB)

SDI Card: Blackmagic Design Decklink 8K Pro

Obviously, this workload stretches all aspects of an IT system. The bitrate of the Daniel2 content can peak at 3.5Gb/s, so fast storage access is a must. Also, since the frames are so huge, the amount of RAM on the GPU becomes critical. It also means that the playout engine must use a reduced queue size for frame pre-caching, so storage must be not only fast, but smooth.
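To put the storage requirement in perspective, the sketch below converts the quoted 3.5Gb/s peak into bytes per second and per frame at 59.94fps, and shows how much playback time a given pre-cache queue covers. The 150-frame value is the default queue size mentioned later in this post; the smaller depths are illustrative only, and the whole thing is a back-of-envelope estimate rather than a sizing tool.

```python
# Back-of-envelope storage and buffering figures for the 8K example above.
# Assumptions: 3.5 Gbit/s is the quoted peak Daniel2 bitrate; queue depths
# other than the 150-frame default are illustrative values only.

PEAK_GBPS = 3.5
FPS = 60000 / 1001  # 59.94 fps

peak_mb_per_sec = PEAK_GBPS * 1e9 / 8 / 1e6   # megabytes per second at peak
peak_mb_per_frame = peak_mb_per_sec / FPS     # worst-case compressed frame size


def buffer_seconds(queue_frames: int) -> float:
    """How much playback time a pre-cache queue of N frames covers."""
    return queue_frames / FPS


print(f"Peak read rate:  {peak_mb_per_sec:.1f} MB/s")
print(f"Peak frame size: {peak_mb_per_frame:.2f} MB")
for depth in (150, 25, 10):
    print(f"Queue of {depth:3d} frames covers ~{buffer_seconds(depth):.2f} s")
```

A 3.5Gb/s peak works out to 437.5 MB/s, or roughly 7.3 MB per frame, and a 25-frame queue covers well under half a second. That is why any stall in storage reads shows up so quickly once the queue is reduced.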

Not looking to roll out 8K before retirement? Well, the good news is that the effort taken to make 8K work makes more standard HD workloads easy by comparison, kind of like how an Olympic sprinter can handle a quick jog across the car park to get out of the rain. It’s thanks to this investment in 8K that, in the following sections, we can talk about how much lower the CPU load of Cinegy Air 14 can be in optimal scenarios when running more traditional tasks.

In conclusion, with a decent IT budget, careful planning and possibly a change of underwear it is possible to roll out an 8K system today with Cinegy Air 14. You just need to explain to your boss why you need to buy some new 70-inch Sharp 8K TVs…

SRT Encapsulated IP Input and Output

Returning to the more conventional world of broadcasting, where HD is still considered something high-end (rather than just the starting point of a graph), one business requirement that is not going away soon is moving signals from one place to another.

With increasing public cloud adoption, and an ever more connected world to report from or hold events in, the arrival of an open technology for moving signals around could not have been better timed. For those of you who are not aware of what SRT is, I encourage you to visit the website of the SRT Alliance (of which Cinegy is a member) and learn a bit about it:

For those who don’t want to follow a link, in a nutshell SRT (which stands for ‘Secure Reliable Transport’) is a protocol that recovers lost packets to allow very low-latency connections over imperfect networks, and it is available as an open-source library that anyone can include in their products.

We at Cinegy are jumping-up-and-down happy about this because, for the first time, there is a way to add such a capability to our products that just works with other vendors’ equipment. Sure, the concept behind SRT is nothing new, and some high-quality products already fill this space. But now anyone can turn on these features, baked right into Cinegy Air 14, and backhaul a stream from AWS or Azure via the public Internet to their premises. It’s built in and has no cost element: if you have a copy of Cinegy Air under SLA, you can simply update to Cinegy Air v14 and use it.

Since we integrated this into our tools, we’ve never looked back, even for internal use. We no longer need to connect every workstation in the office to the dedicated video network just to pull up the output from machines elsewhere; SRT removes that need. Anywhere you could previously send an MPEG-2 Transport Stream in or out of our products, you can now also use SRT as the wrapping envelope.

It’s hard to explain in words quite how much this changes what you can do, so we’ve made a small GIF that shows how to take a Cinegy Air channel and add an H.264 output stream in "SRT Pull" mode, finishing by connecting good old VLC to the stream:

While the GIF shows a "pull" of a stream, you can also set up a "push" (or "Caller mode", in SRT speak) to proactively send the stream to anything sat waiting to catch one. An area where this suddenly makes things much easier and neater is the public Cloud: you no longer need to carefully balance where you unicast what; instead, set up SRT on the Cinegy Playout engine to let clients pull a stream ("Listener mode") as they need it.
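To make the two modes concrete from the receiving side, here is a small sketch that builds the URLs a client would use. It assumes a receiver such as ffplay or ffmpeg built with libsrt, which accept `srt://host:port?mode=...` URLs with `caller`, `listener` or `rendezvous` modes; the host addresses and port are hypothetical (192.0.2.x is a documentation range), and the `srt_url` helper is an illustrative name, not part of Cinegy Air.

```python
from urllib.parse import urlencode

VALID_MODES = ("caller", "listener", "rendezvous")


def srt_url(host: str, port: int, mode: str, **options) -> str:
    """Build an srt:// URL for a client; extra options are passed through as-is."""
    if mode not in VALID_MODES:
        raise ValueError(f"unknown SRT mode: {mode}")
    query = urlencode({"mode": mode, **options})
    return f"srt://{host}:{port}?{query}"


# "Pull": the playout engine listens, so the viewer calls in to it.
pull_url = srt_url("192.0.2.10", 6000, "caller")

# "Push": the playout engine calls out, so the receiver must listen
# (0.0.0.0 means listen on all local interfaces).
listen_url = srt_url("0.0.0.0", 6000, "listener")

print(pull_url)    # srt://192.0.2.10:6000?mode=caller
print(listen_url)  # srt://0.0.0.0:6000?mode=listener
```

With an engine output in "SRT Pull" (listener) mode, something like `ffplay "srt://192.0.2.10:6000?mode=caller"` would then fetch the stream. Options such as latency can be appended through `**options`, but check your client's documentation for units (ffmpeg's `latency` option, for example, is in microseconds).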

You can look forward to further SRT enhancements in the next point-releases of Cinegy Air, as well as being integrated into our other products. We’ve not yet exposed any parameters and options for people to adjust; it’s pre-set into "it-just-works mode" – but we will add more knobs and dials to allow people to control encryption, latency and bandwidth in coming releases.

More Performance Optimization

As alluded to in the 8K section above, we’ve once again improved the performance of the Cinegy Playout engine. With the introduction of Cinegy Air 12, we moved the compositing stage of the playout pipeline so that it could optionally reside within the GPU. This had great benefits for customers who wanted to use our integrated branding system to add graphics to the output, or who were using the very convenient and efficient NVIDIA "NVENC" H.264/H.265 encoding blocks, since we could neatly combine these things and copy frames around inside the super-fast GPU memory.

However, our studies have shown that as people pushed the boundaries of the engine, the computational overhead of taking source material decoded within the CPU (or worse, decoded by the GPU and then handed to the CPU just to be sent back to the GPU) was becoming a limiting factor. To reach the dream of 8K60 at 10-bit, we knew we needed to cure this. As a result, we created a way to connect the input stage of our media layer to the compositing stage without passing through the CPU at all, performing any technology conversions (e.g. CUDA to DirectX) or color-space conversions (e.g. YV12 to YUY2) within that interconnection.
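As an illustration of what one of those color-space conversions involves, here is a CPU reference sketch in Python that converts a YV12 frame (planar 4:2:0) to YUY2 (packed 4:2:2). It is not Cinegy's implementation, which runs inside the GPU; it simply reuses each chroma row for two luma rows (real converters may interpolate instead), and the function name is hypothetical.

```python
def yv12_to_yuy2(buf: bytes, width: int, height: int) -> bytes:
    """Convert one planar YV12 (4:2:0) frame to packed YUY2 (4:2:2).

    YV12 layout: full-size Y plane, then a quarter-size V plane, then a
    quarter-size U plane. YUY2 stores each horizontal pixel pair as Y0 U Y1 V.
    """
    if width % 2 or height % 2:
        raise ValueError("width and height must be even")
    y_size = width * height
    c_w = width // 2
    c_size = c_w * (height // 2)
    if len(buf) < y_size + 2 * c_size:
        raise ValueError("buffer too small for a YV12 frame")

    y = buf[:y_size]
    v = buf[y_size:y_size + c_size]                # V (Cr) plane comes first in YV12
    u = buf[y_size + c_size:y_size + 2 * c_size]   # then the U (Cb) plane

    out = bytearray(width * height * 2)
    o = 0
    for row in range(height):
        c_row = row // 2  # each 4:2:0 chroma row serves two luma rows
        for col in range(0, width, 2):
            ci = c_row * c_w + col // 2
            yi = row * width + col
            out[o:o + 4] = bytes((y[yi], u[ci], y[yi + 1], v[ci]))
            o += 4
    return bytes(out)


# A 2x2 frame: four luma samples, then one V sample (200) and one U sample (100).
print(list(yv12_to_yuy2(bytes([10, 20, 30, 40, 200, 100]), 2, 2)))
# [10, 100, 20, 200, 30, 100, 40, 200]
```

Doing this per pixel on the CPU for every frame is exactly the kind of work the GPU interconnection avoids; at 8K it would be billions of byte operations per second.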

Here is another lovely GIF showing the resulting change in a visual way, with each example performing the same base tasks on an i7-6700 with a GeForce GTX 1060:

– Playing a 4:2:0 H.264 Long-GOP clip in 1080i50

– Creating an NVIDIA encoded H.264 5Mbps IP stream

– Rendering a static channel ident

Obviously, when you need to do 64x more work than HD to make 8K work the way it’s needed, you need to get those graphs down so low you can barely see them!

A couple of words of warning, though, before people rush off to consolidate too much onto a single machine:

-

Please be conscious of GPU RAM: since decoded content now runs and stays in the GPU, the "queue" needs to hold frames in the GPU. You might need to reduce the queue size from the relatively massive 150-frame default to something a bit more respectful of GPU RAM sizes (e.g. 25).

-

Don’t load up more channels than you have CPU cores; the engine still needs some CPU power for house-keeping, and sometimes it needs to perform high-priority activity on the CPU. If no CPU core is available, you can get drop-outs.
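To see why the queue size matters, the following sketch estimates how much GPU memory a queue of decoded frames would occupy. The per-pixel sizes are assumptions (8-bit 4:2:2 for HD, 10-bit 4:2:2 padded to 16 bits for 8K), not a documented Cinegy internal format, so treat the results as orders of magnitude.

```python
# Estimate GPU memory consumed by a queue of decoded frames held in the GPU.
# Assumed storage: HD as 8-bit 4:2:2 (2 bytes/pixel); 8K as 10-bit 4:2:2
# padded to 16 bits per sample (4 bytes/pixel).

GIB = 1024 ** 3


def queue_gib(width: int, height: int, bytes_per_pixel: int, frames: int) -> float:
    """GPU memory (GiB) needed to hold `frames` decoded frames."""
    return width * height * bytes_per_pixel * frames / GIB


for label, w, h, bpp in (("HD 8-bit 4:2:2", 1920, 1080, 2),
                         ("8K 10-bit 4:2:2", 7680, 4320, 4)):
    for frames in (150, 25):
        print(f"{label:16s} {frames:3d} frames: {queue_gib(w, h, bpp, frames):6.2f} GiB")
```

Under these assumptions, 150 decoded 8K frames would need about 18.5 GiB, far beyond an 8GB board such as the Quadro P4000 in the 8K example, whereas 25 frames need about 3.1 GiB. At HD, even the 150-frame default stays under 0.6 GiB.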

However, using this knowledge, you should be able to get much more life out of existing equipment – maybe with a GPU update for a few hundred dollars. Remember though that not all codecs and containers can be decoded within GPU – if you decode something that is only supported by CPU, then you’ll see performance similar to the Cinegy Air 12 engine.

Accelerated codecs / container combinations are:

-

H.264 (4:2:0 only) – IP or elementary stream and MXF container

-

H.265 (4:2:0 only) – IP or elementary stream only

-

Daniel2 (4:2:0, 4:2:2, 4:4:4) – IP or elementary stream and MXF container
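The list of accelerated combinations can be expressed as a small lookup table, which may be handy for sanity-checking a media library before deciding how many channels to place on a machine. The codec and container labels are our own shorthand ("ip" and "es" for IP or elementary streams), not Cinegy configuration values, and the function name is hypothetical.

```python
# GPU-decodable codec/container combinations as listed for Cinegy Air 14.
# Labels are illustrative shorthand, not Cinegy configuration values.

GPU_DECODE = {
    "H.264":   {"chroma": {"4:2:0"}, "containers": {"ip", "es", "mxf"}},
    "H.265":   {"chroma": {"4:2:0"}, "containers": {"ip", "es"}},
    "Daniel2": {"chroma": {"4:2:0", "4:2:2", "4:4:4"}, "containers": {"ip", "es", "mxf"}},
}


def gpu_decodable(codec: str, chroma: str, container: str) -> bool:
    """True if the combination stays on the GPU path per the list above.

    Anything else falls back to CPU decode (Cinegy Air 12-like performance).
    """
    entry = GPU_DECODE.get(codec)
    return bool(entry) and chroma in entry["chroma"] and container in entry["containers"]


print(gpu_decodable("H.265", "4:2:0", "mxf"))    # False: no H.265-in-MXF yet
print(gpu_decodable("Daniel2", "4:4:4", "mxf"))  # True
```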

We look forward to SMPTE completing their work towards supporting H.265 inside an MXF container, so we can add this option.

WDM and WebCam Input Devices as Sources

We’ve always worked hard to offer a great cross-section of board support within our products, but there is always a newer board, or a board with some extra features someone wants to work with that we don’t support natively.

Now, with Cinegy Air 14, we’ve added a generic wrapper that allows people to use boards not otherwise supported by Cinegy with the engine. This works by using the Microsoft WDM (Windows Driver Model) framework as an intermediary to access a board. You might have seen this as the mechanism by which something like a Blackmagic Decklink board becomes available to an application such as Skype. This model also opens up a world of "virtual device" inputs from other software: if you have another application that presents as a WDM device, you can now bind it to an input in Cinegy Air.

When accessing a board via WDM, Cinegy Air will attempt to get the requested raster format from the board through the WDM interfaces, so that the input video has the highest quality in the application. In many cases this can prove absolutely perfect for requirements, so why do we still bother to support any boards "natively"? Well, WDM is not without limits. Using that framework, we can’t directly configure features of a board, for example to choose which ports to enable on an AJA board. Neither can we access extra capabilities, such as reading timecode and sync from an LTC port. Finally, we have less control over the board in general, so we can’t support a board via WDM as a 24x7 mission-critical component; to achieve that level of confidence, we test specific supported boards in the lab and make driver and firmware recommendations.

But if you are in a hurry and can accept these limits – you now can.

An offshoot of this work is a far more fun improvement, which, while it might not be generally useful for people in a proper broadcast environment, is a great benefit for people performing training, testing, or demoing our software – webcam support!

When you pick a detected webcam, Cinegy Air will negotiate with that webcam to work out what the best choice of raster and framerate is to synchronize with the configured channel (note: turn on full logging in Cinegy Air and take a peek at what we dump to the logs to see what your camera can do).
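Cinegy's exact negotiation logic is not described here, so the following is a hypothetical sketch of one reasonable strategy: prefer modes whose frame rate matches the channel, then pick the largest raster that fits within the channel raster, falling back to the largest available raster (to be scaled) if none fits. The `Mode` type and `pick_mode` function are illustrative names only.

```python
# Hypothetical webcam mode selection; not Cinegy's actual algorithm.
from typing import NamedTuple, Optional, Sequence


class Mode(NamedTuple):
    width: int
    height: int
    fps: float


def pick_mode(modes: Sequence[Mode], ch_w: int, ch_h: int, ch_fps: float,
              tol: float = 0.01) -> Optional[Mode]:
    if not modes:
        return None
    # 1. Prefer modes whose frame rate matches the channel (else consider all).
    same_rate = [m for m in modes if abs(m.fps - ch_fps) <= tol] or list(modes)
    # 2. Prefer rasters that fit inside the channel raster (no downscaling).
    fits = [m for m in same_rate if m.width <= ch_w and m.height <= ch_h]
    pool = fits or same_rate
    # 3. Take the largest raster, breaking ties on frame rate.
    return max(pool, key=lambda m: (m.width * m.height, m.fps))


camera = [Mode(640, 480, 30), Mode(1280, 720, 30), Mode(1920, 1080, 30),
          Mode(1920, 1080, 25), Mode(3840, 2160, 25)]
print(pick_mode(camera, 1920, 1080, 25))
# Mode(width=1920, height=1080, fps=25)
```

For example, offered that list of modes, a 1080-line channel running at 25fps would get 1920x1080 at 25fps under this strategy, while a 720p channel at 30fps would get 1280x720 at 30fps.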

With a webcam configured as an input, you now have a readily available, low-latency source of video to use for whatever you wish – here is another GIF showing the set up and use of the camera with Cinegy Air set to jump directly to "live" on startup:

Item Replacement & Type Color Categories

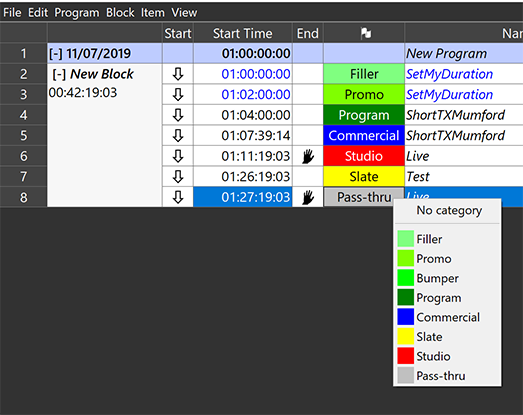

Switching to the operators rather than the core engine, a couple of call-out improvements in Cinegy Air 14 involve labelling and replacing items in the playlist. We’ve introduced the ability to flag items in the playlist with customizable color categories, helping to make it easier to glance at a playlist and see what types of content are within the grid.

We’ve added some sensible defaults to the list, but you can set them to anything you want within the configuration panel. If you are using automation to set these values, you can print anything you want into the source XML and the Cinegy Air grid will show what you entered – as a user, you can then click to import any values that are displayed in the list, in case you want to re-use them.

Additionally, we’ve improved the way you can replace items inside the panel. If you hold down Ctrl while drag-replacing an item, you are now presented with a nice dialog that helps you decide how you want the item replaced. Some of this was possible before, but you needed to remember whether it was Ctrl or Alt to work out what was going to happen… now just use Ctrl and then review the dialog box, as below:

Playout Engine as a Windows Service

Loyal customers who discovered Cinegy a long time ago will recall that, once upon a time, it was possible to run the Cinegy Playout engine as a Windows service. This was lovely: you didn’t have to worry if a machine was rebooted and someone had to log back in (or set up an account to auto-login). However, with Windows Server 2008, Microsoft introduced a feature called "Session 0 Isolation" that made it technically impossible to keep running as a Windows service and still access the GPU (note: it’s fun to Google that term and see how many unhappy people vented in forums when it was introduced).

We tried hard to soften the blow by introducing Playout Dashboard in the system tray, which is actually a really nice way to interact with the engine while configuring and experimenting.

In the intervening years, Microsoft re-enabled support for DirectX and NVIDIA enabled support for CUDA from within a Windows service (Windows 8 / Server 2012 onwards). However, OpenGL has still not been re-enabled – so, rather than wait (possibly forever), we re-created our branding engine on DirectX and removed all OpenGL from the product.

Now that we have formally ended official support for Cinegy Type in Cinegy Air 14, we’ve re-introduced the ability to configure the engine to run as a Windows service. But we did it in such a way that people can keep working interactively (and enjoying the dashboard), whilst also pleasing sysadmins who prefer to work with automation and scripts. From Cinegy Air 14 (and from Cinegy Air 12.3 when it ships), we have added a PowerShell script that helps people install the engine as a Windows service.

Using this script, you can convert all configured Cinegy Playout engines to a service and inhibit the dashboard from starting a fight with the services. We’ve posted the actual script to GitHub in case people want to adapt this script to configure services in a different way:

You’ll find the script in the "support" folder when you unzip Cinegy Air, along with a classic BAT file you can use to start it:

The script can be used to create the services, but also stop and remove all services should you wish to go back to working with the Dashboard.

Working this way is not only more secure, it also helps keep the load on the servers lower; the Dashboard generated a reasonable amount of extra load, particularly over remoting tools. Combined with XML-based engine configurations, you can configure and run a playout server without logging into the console at all. Check out the example PowerShell-based configuration template script, also on our GitHub page:

There are some things to remember when running as a service though:

-

Don’t forget that you can’t use any legacy Cinegy Type scenes (even if they are embedded in sequences!)

-

Your service will need to have permission to any network storage – either by running the service as a specific privileged account or by granting the computer account access to shares.

-

The Dashboard will refuse to start with a pop-up, and if you leave it in the start-up folder you will get that pop-up any time you log in (but it’s harmless to just click through).

We hope you are glad to have services make a welcome return and would encourage you to review these scripts and adapt to your needs. Don’t forget about the "Unattended-Install" script also in the "Support" folder – it’s a nice timesaver on machines that want a default install. And in case you are wondering – the BAT file equivalents of the PowerShell scripts are just an easy way to let you choose "Right-click → Run as admin" to get the PowerShell launched correctly.

Closing

Cinegy Air 14 has now been released. Last year we recommended that people grab a laptop and make their first HD channel, but this year, why stop at HD? With our improved engine, use that laptop (and a webcam!) for a full UHD channel.

Updated 19th July 13:51: This article was updated for the purpose of clarification of language and text. A hero image was added.