Cloudy, with a Chance of Broadcast

Reading time ~15 minutes

This post is a slightly amended version of the presentation that Mike Jacobs gave during Cinegy’s Technical Conference 2021, which was live-streamed on March 25th and is now available to view as a recording.

Cloud Broadcasting

In previous years, talking about broadcasting from the cloud was something of an oxymoron.

People were not sure if even simple broadcasts of a single channel could be run from there.

But it is no longer a question of whether it is possible to run channels in the cloud.

In today’s world, the question is most definitely what people want to run in the cloud, and the Cinegy software forms a large part of that answer.

Cinegy has been enabling broadcasting from the cloud for a few years now and has customers who are successfully running anything from a couple of channels up to complete end-to-end workflows there.

During this time, we have learnt a few things, so today we are going to cover some topics that we feel still generate questions from customers and, hopefully, provide some useful information.

First, we shall cover what a cloud deployment using Cinegy is. What does it look like and how does it behave?

Next, we are going to move on to talking about tools, as there are lots of them out there which can be used with cloud deployments. We are going to cover ones which we have found useful for repetitive tasks and monitoring of workloads.

Finally, we shall talk about some areas which aren’t necessarily given the attention they should and can cause issues further down the road.

Cloud Deployments

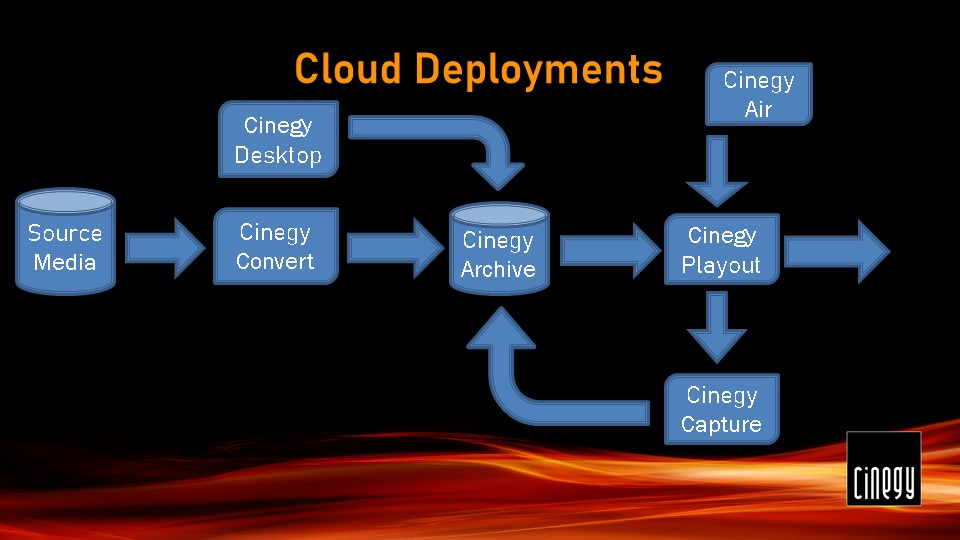

"So, what is a cloud deployment using Cinegy?" you may ask.

Well, a deployment can vary from a single pop-up Cinegy Playout engine used for special events all the way up to a complete production chain: ingest with Cinegy Convert, management and editing with Cinegy Desktop and Cinegy Archive, playout with Cinegy Air, and recording with Cinegy Capture for Video on Demand workflows.

Now obviously, this is just a diagram showing pieces of the Cinegy software. But how does that relate to the cloud?

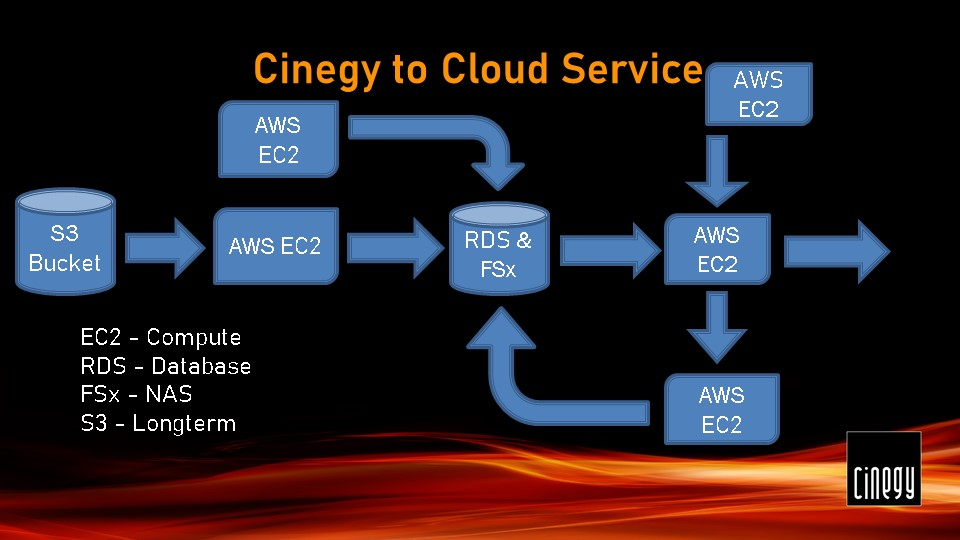

Mapping the various blocks of the Cinegy software onto some example cloud services shows that what may seem a complex deployment can actually be reduced to just four different capabilities.

We have used AWS services for this example. In no particular order, EC2 provides the compute, RDS delivers our database, FSx acts as the shared network storage and, finally, S3 is our long-term media store.

This is only an example: the S3 bucket could be replaced with any source of original media, and Cinegy Archive could also run on a compute instance.

One of the questions we at Cinegy are asked most frequently is: what size of compute instance do we need to run a specific playout configuration in the cloud?

Here are some examples of the types of questions we get:

Further on we will give you some examples of playout configurations with related AWS instance sizes and loadings.

Obviously, to carry out these load tests we need a configuration for the Cinegy Playout engine, and there are lots of options here. We have chosen a config that is representative of cloud playout; this will be the base config, which we will then adjust as necessary, calling out any changes. Let’s quickly run through this config:

We have an instance name set and under "Licensing" we have simply added the "Cinegy Air Pro" control option. Moving on to the "Playback" tab we have one channel with a single IP output added. We have selected the available NVIDIA GPU to be used as Video Effects Accelerator and set a buffer of 50 frames in RAM. The feedback video will be encoded using Cinegy’s Daniel codec.

The single IP output runs in unicast mode and acts as an SRT listener on port 6001. There is no need to set a passphrase to encrypt the SRT output for this test. Running the engine as a listener allows us to reach out and request the stream from wherever we want to view it, and the only network ports we need to open on a firewall are those in this cloud environment.
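Because the engine is the listener, whatever views the stream connects to it as an SRT caller. A minimal sketch, assuming FFmpeg's ffplay built with libsrt is installed on the viewing machine, and using 203.0.113.10 (a documentation address) as a placeholder for the instance's public IP:

```python
import shlex


def srt_caller_url(host: str, port: int = 6001) -> str:
    """Build an SRT URL that calls an engine output running in listener mode."""
    # mode=caller is FFmpeg's default for srt://, but stating it makes the pairing explicit.
    return f"srt://{host}:{port}?mode=caller"


def ffplay_command(host: str, port: int = 6001) -> list[str]:
    # No passphrase option is added because the test output is not encrypted.
    return ["ffplay", srt_caller_url(host, port)]


if __name__ == "__main__":
    # Placeholder address (RFC 5737 documentation range), not a real instance.
    print(shlex.join(ffplay_command("203.0.113.10")))
```

SRT runs over UDP, so it is UDP port 6001 that has to be allowed through the instance's firewall or security group.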

We will neither create any custom output rasters nor change any of the default transport PID settings.

We have chosen to encode the output as H.264, offloaded to the NVIDIA GPU. It is encoded at a bitrate of 10 Mbps with the GOP structure set to IBBP, and the other settings as seen in the picture.

We shall also include a single stereo pair, encoded as MPEG Layer 2 at a bitrate of 192 kbps. Finally, under the "CG" tab, we enable the Cinegy Titler engine so that graphics branding can be used.
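Since every test below starts from this base config and calls out only what changes, it can help to record the settings as data and derive each variation as an override. A minimal sketch; the field names are hypothetical summaries of the settings described above, not Cinegy Air's configuration schema:

```python
from dataclasses import dataclass, fields, replace
from typing import Optional


@dataclass(frozen=True)
class EngineTestConfig:
    """Hypothetical summary of the base playout test config (not Cinegy's schema)."""
    license_option: str = "Cinegy Air Pro"
    channels: int = 1
    player_mode: str = "HD"
    gpu_effects_accelerator: bool = True   # NVIDIA GPU as Video Effects Accelerator
    ram_buffer_frames: int = 50
    feedback_codec: str = "Daniel"
    srt_mode: str = "listener"             # unicast; the engine waits for callers
    srt_port: int = 6001
    srt_passphrase: Optional[str] = None   # output not encrypted
    video_codec: str = "H.264 (NVIDIA GPU)"
    video_bitrate_mbps: float = 10.0
    gop_structure: str = "IBBP"
    audio_codec: str = "MPEG Layer 2"
    audio_bitrate_kbps: int = 192          # one stereo pair
    titler_enabled: bool = True


def changes(base: EngineTestConfig, variant: EngineTestConfig) -> dict:
    """Return only the settings that differ from the base config."""
    return {
        f.name: getattr(variant, f.name)
        for f in fields(base)
        if getattr(variant, f.name) != getattr(base, f.name)
    }


BASE = EngineTestConfig()
UHD = replace(BASE, player_mode="UHD", video_bitrate_mbps=35.0)
print(changes(BASE, UHD))  # {'player_mode': 'UHD', 'video_bitrate_mbps': 35.0}
```

The UHD variant shown here matches the changes described later in the UHD test; any other variation is expressed the same way.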

Some Testing

Now that we have the starting engine config, we can take a look at how many HD channels we can run on the smallest of the older AWS G3 instance types.

We shall start with 1 simple HD channel with no graphics and review the CPU and GPU loading on the instance. Then we shall increase this by adding 1 channel at a time and take a look at the extra load.

Before we turn on any Cinegy Air engines, let’s have a quick look at how the machine runs at basically idle. You can see that the CPU load is around 10% on average and the GPU is just running along at about 6%.

1 HD Engine Running

Looking at the load on the AWS instance, we can see the CPU utilization hovering around 30%, and if we move over to the GPU, we can see that the video encode is averaging around 18%, moving up and down a little either side.

Please bear in mind that these figures are affected by our RDP connection into the machine and by rendering the graphs we are viewing.

2 HD Engines Running

Having moved up to 2 HD engines with 2 simultaneous HD outputs, we can see that the CPU load has increased to an average of 50%, and on the GPU the video encode has gone up to about 30% on average, so not quite a doubling of load.

3 HD Engines Running

Here we are again now with 3 channels running on this machine and we can see that the CPU load is hovering around 73-74%. It’s getting quite high now and probably going to prevent us from adding any more channels to this machine. The NVIDIA GPU is doing well, the video encode is averaging around 40% to 41% utilization.

Outcome

Here are the results:

We started with around 10% CPU, 6% GPU utilization and as we moved up adding more channels, it was the CPU that was topping out at an average of 72%.

The GPU was only at 42%, and this shows that we can deliver 3 simple HD channels from the smallest of the older G3 AWS instances, the G3S.
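A quick way to see which resource runs out first is to work out the average extra load each channel adds and extrapolate linearly. A minimal sketch using the idle and 3-channel averages quoted above; the 80% ceiling is an assumed comfort limit, not a Cinegy recommendation:

```python
import math


def marginal_load(baseline: float, loaded: float, channels: int) -> float:
    """Average extra utilization (percentage points) added per channel."""
    return (loaded - baseline) / channels


def max_channels(baseline: float, per_channel: float, ceiling: float) -> int:
    """Channels that fit before utilization would pass `ceiling`, assuming linear scaling."""
    return math.floor((ceiling - baseline) / per_channel)


# G3S figures from the tests above: idle averages and 3-channel averages.
cpu_step = marginal_load(10, 72, 3)  # roughly 20.7 points per channel
gpu_step = marginal_load(6, 42, 3)   # 12 points per channel

CEILING = 80  # assumed comfort limit for this illustration
limits = {
    "CPU": max_channels(10, cpu_step, CEILING),
    "GPU": max_channels(6, gpu_step, CEILING),
}
print(limits, "-> bottleneck:", min(limits, key=limits.get))
# {'CPU': 3, 'GPU': 6} -> bottleneck: CPU
```

The linear model is only a rough guide: the measurements show the third channel added more CPU than the second did, and the optimizations below change the per-channel figures.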

Could we fit any more engines onto that instance?

We could probably have fitted 1 more HD channel onto the instance by carrying out some additional optimizations and moving load off the CPU and onto the GPU.

To minimize our use of the limited CPU available, we could have ensured our playout media was encoded in a GPU-decodable codec such as H.264, so that it stayed in our GPU pipeline.

We could have also removed the feedback video from being generated, but it needs to be balanced against operators' requirements. Finally, we could have moved the playout engines over to run as Windows services, which removes the requirement to run the Playout dashboard application.

Newer Better?

That was the smallest of the older generation G3 AWS instances. Could we achieve more with the newer G4s, which are the only ones available in some of the newer data centers?

Possibly, but the challenge here is that because NVIDIA has removed support for interlaced encoding on H.264 in their newer chipsets, we need to move to a progressive output.

Will this offset the gains from the newer hardware in this instance?

We repeated the same process as before, adding engines one at a time, and we could achieve more with this instance, even with the move to progressive output.

We started with a baseline a bit lower than on the G3, 3% CPU and 1% GPU utilization, and as we increased to 4 HD channels, the CPU moved up to about 83%: slightly higher than on the G3, but still an acceptable load.

The GPU was averaging around 54%, which again is an acceptable load. These are good results, and they show the benefits of the newer hardware platform.

But don’t forget that this is still the smallest of the available G4 instance sizes!

Here we have the AWS G4 instance. Currently we are running 3 HD channels in 1080p50 with 3 SRT outputs connected, and as we can see - the loading is actually not too bad. The CPU is averaging about 60% load and the GPU, which is the newer Tesla T4, is averaging about 40% load on the video encode.

Here we are with 4 HD streams running simultaneously from this G4 instance. The CPU is averaging about 80% and holding steady, and the NVIDIA card is running the encode load at about 54-55% on average, which is pretty good.

High Definition with Graphics

What about when you add graphics into the output?

Simple elements, such as ratings and logo bugs using image files, will not make a noticeable difference to the instance load. But what about more complex graphics such as animated lower-thirds?

When the graphic kicks in, the GPU load goes up to about 18%, and the CPU load goes up to about 29 to 30%.

Once the graphic has finished and cleared, the GPU is back down to 13% as before, and the CPU drops back to its average of about 20-22%.

So the animated lower-third does increase the load on the machine. When the graphics engine was first initialized, the CPU load went up to about 42%, then settled back to about 30% while the graphic ran. The GPU load went up to about 18% before dropping back once the graphic cleared.

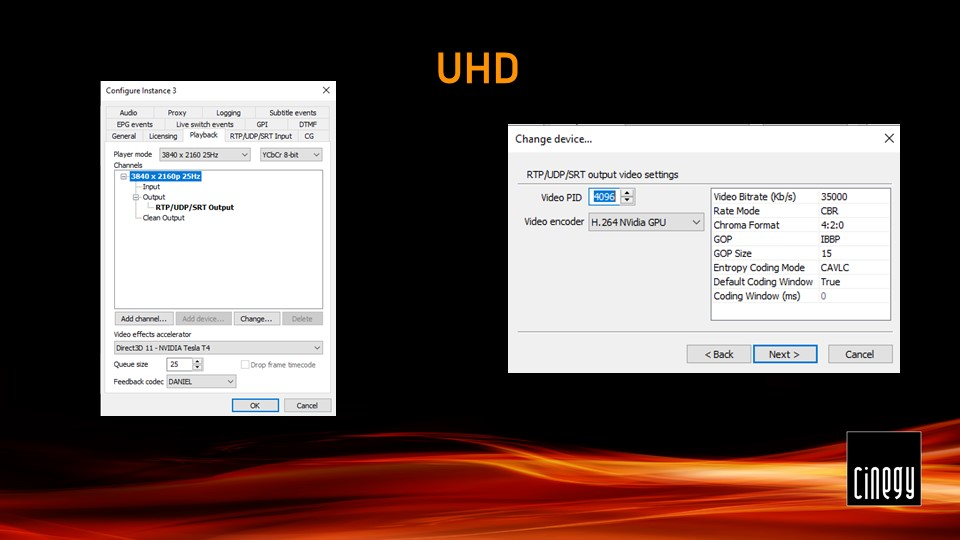

UHD

Now we come to UHD. Is this going to be a challenge to deliver?

Here are the changes made to the engine config: we simply moved the player mode up to UHD and increased the output bitrate to 35 Mbps, still encoding as H.264.

Here we have the G4 AWS instance playing out a single UHD channel, and at the moment you can see the CPU load is going up and down around 30% and the GPU is staying around 14% utilization.

The CPU load is now dropping to 16-18%, whilst the GPU load has increased slightly. This is because the playlist uses 2 clips with different encodings, one ProRes and the other HEVC, and as playout moves between them, the load on the machine varies accordingly.

So, is UHD a challenge to deliver? The answer is a simple "no". With the sample media files loaded into the playlist, we see the CPU load reaching a maximum of 60% and the GPU load a maximum of 18%.

As mentioned before, there were two different files which used two different codecs and this is why we saw the variance in the CPU and GPU loads.

And don’t forget that this UHD channel was still running on the smallest of the G4 instance sizes available.

Hopefully, you will find those numbers and examples useful and they demonstrate once again just how efficient and flexible the Cinegy software is.

So you can trust us when we say that it is possible to run more than 1 UHD channel on a single instance.

Tools

Let us now move on to tools, and which ones Cinegy has found to be of great use.

The 2 areas we are going to talk about are automated deployments and that old classic, monitoring.

Migrating workloads to the cloud requires testing and investigation. You have to run the same tests in different configurations, iteratively. Having a development environment and a staging environment is good practice, and being able to quickly create and delete them for fresh testing is extremely useful.

Whilst the cloud providers have their own automation services, we always prefer an agnostic solution and Terraform is a brilliant one.

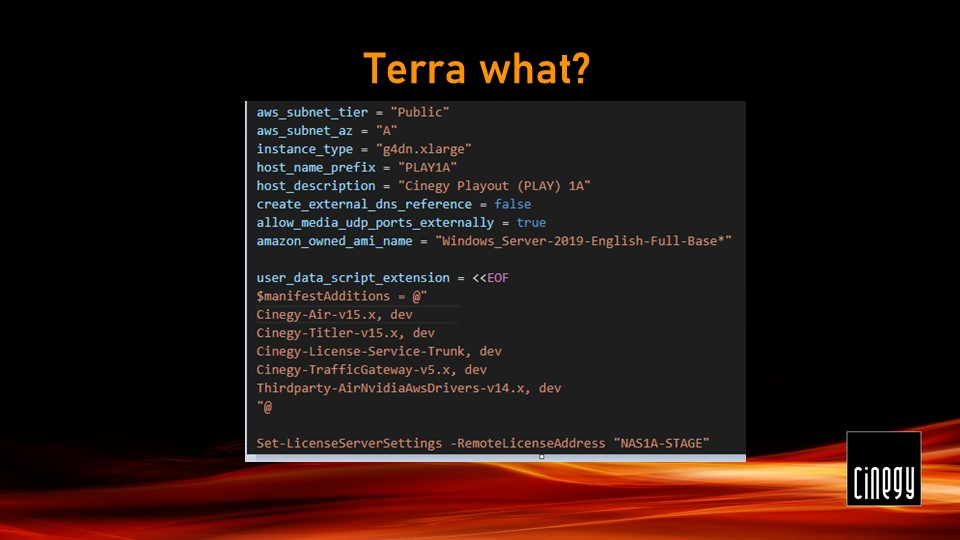

To quickly quote their website, "Terraform is an open-source infrastructure as code software tool that provides a consistent CLI workflow to manage hundreds of cloud services."

Terraform uses various types of files to contain the information needed to deploy the elements which make up your environment.

Here is a sample of the contents of one of the files used by Terraform. You can see how we define a G4 instance that will be deployed into a subnet and given a name, using the Amazon Windows Server 2019 image as its starting point.
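Besides its native HCL syntax, Terraform also accepts configuration written as JSON in `*.tf.json` files, which makes it easy to generate definitions like the one described above from a script. A minimal sketch, assuming the smallest G4 size (g4dn.xlarge), an example region, and an AMI name filter for Amazon's Windows Server 2019 base image; check the current AMI naming and region for your own account:

```python
import json


def playout_instance_config(resource_name: str, display_name: str,
                            instance_type: str = "g4dn.xlarge",
                            region: str = "eu-west-1") -> dict:
    """Return a Terraform configuration in JSON syntax for one playout instance."""
    return {
        "provider": {"aws": {"region": region}},  # assumed example region
        "variable": {
            # Supplied at plan/apply time, e.g. -var="subnet_id=subnet-..."
            "subnet_id": {"type": "string"},
        },
        "data": {
            "aws_ami": {
                "windows_2019": {
                    "most_recent": True,
                    "owners": ["amazon"],
                    # Assumed name pattern for the Windows Server 2019 base image.
                    "filter": [{"name": "name",
                                "values": ["Windows_Server-2019-English-Full-Base-*"]}],
                }
            }
        },
        "resource": {
            "aws_instance": {
                resource_name: {
                    "ami": "${data.aws_ami.windows_2019.id}",
                    "instance_type": instance_type,
                    "subnet_id": "${var.subnet_id}",
                    "tags": {"Name": display_name},
                }
            }
        },
    }


if __name__ == "__main__":
    # Save the printed output as playout.tf.json next to your other Terraform files.
    print(json.dumps(playout_instance_config("playout_01", "Cinegy Playout 01"), indent=2))
```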

Terraform allows you to document your infrastructure as code and keep it in a version-controlled repository such as Git. Once your environment is documented this way, you can create it with one command and delete it with another, because Terraform keeps track of what it has deployed, which is especially useful in cloud environments. No longer do you have to remember the order in which certain services must be deployed, such as creating an Active Directory before you can create your database.
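The reason you no longer need to remember the order is that a tool like Terraform works it out from the dependencies between resources. The sketch below only illustrates that idea with a topological sort from Python's standard library; it is not Terraform's implementation, and the resource names and dependencies are hypothetical, loosely based on the RDS, FSx and EC2 example described earlier:

```python
from graphlib import TopologicalSorter

# Hypothetical environment: each resource maps to the resources it depends on.
DEPENDS_ON = {
    "active_directory": set(),
    "database": {"active_directory"},        # e.g. a database using Windows authentication
    "shared_storage": {"active_directory"},  # e.g. domain-joined file storage
    "playout_instance": {"database", "shared_storage"},
}


def create_order(graph: dict) -> list:
    """Order in which resources can be created: dependencies first."""
    return list(TopologicalSorter(graph).static_order())


def destroy_order(graph: dict) -> list:
    """Tear down in reverse, so nothing is deleted while something still depends on it."""
    return list(reversed(create_order(graph)))


print("create: ", create_order(DEPENDS_ON))
print("destroy:", destroy_order(DEPENDS_ON))
```

Independent resources (here the database and the shared storage) have no fixed order relative to each other, which is also what lets a deployment tool create them in parallel.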

We have used this tool many times, and it is our default way now of deploying anything into the cloud. In fact, we have a video on the Cinegy YouTube channel showing how to go from nothing to 150 playout channels in 10 minutes!

Monitoring

We are now moving on to monitoring. As mentioned earlier when showing resource utilization on the instances, the act of connecting to the machine produces additional load on it.

We at Cinegy have talked before about big data and analytics, and these once again prove invaluable, providing not only real-time information about the instance but also historical information for spotting trends.

Using Metricbeat to deliver data into an Elasticsearch system and then using Grafana to visualize this is a great combination. And keep in mind – it’s free to use!

What does this magical combination of software create for us?

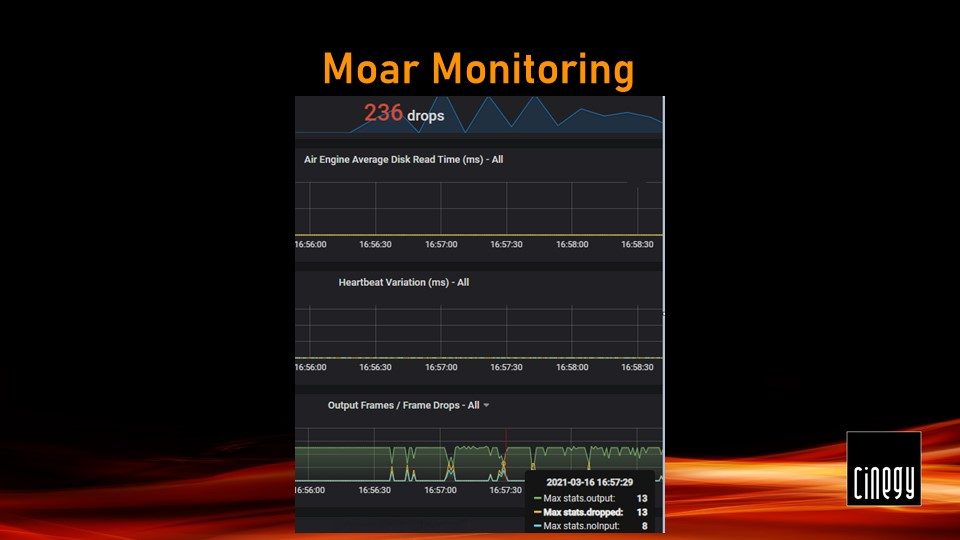

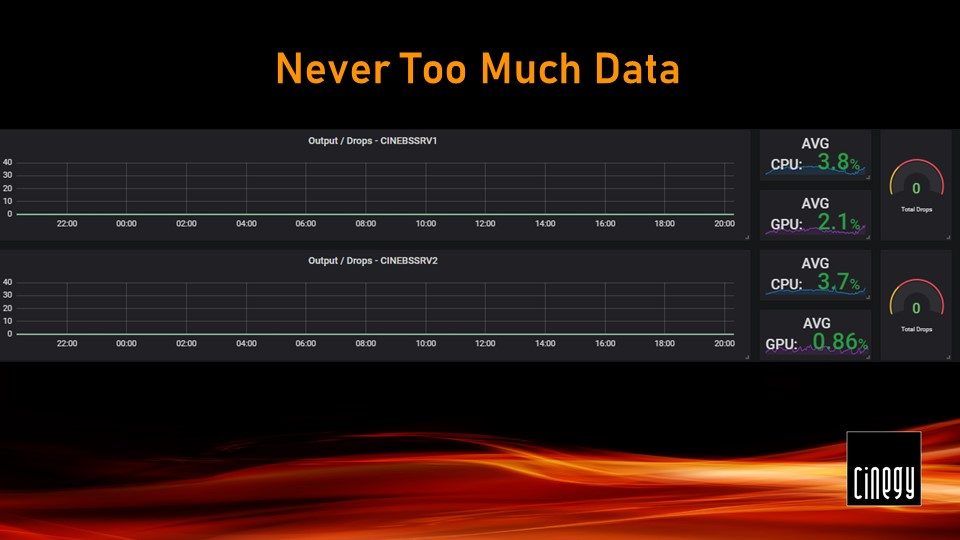

This image shows the types of dashboards you can build with this data and shows the insights you can get into how your system is running. Here we can easily see how our GPU is handling the load across the top, and if we want to get some more detailed information about the CPU etc., we can hover over any of the data points on the graph to see point in time statistics.
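Grafana builds its Elasticsearch queries for you, but it can help to see roughly what sits underneath a CPU panel. A minimal sketch, assuming Metricbeat's system module is shipping normalized CPU percentages (`system.cpu.total.norm.pct`, a 0 to 1 value) into indices matching `metricbeat-*`, and an Elasticsearch endpoint that needs no authentication; all of these are assumptions about your setup:

```python
import json
import urllib.request


def cpu_history_query(host_name: str, minutes: int = 60) -> dict:
    """Average normalized CPU per minute for one host over the last `minutes`."""
    return {
        "size": 0,  # only the aggregation results are needed, not raw documents
        "query": {
            "bool": {
                "filter": [
                    {"term": {"host.name": host_name}},
                    {"range": {"@timestamp": {"gte": f"now-{minutes}m"}}},
                ]
            }
        },
        "aggs": {
            "per_minute": {
                "date_histogram": {"field": "@timestamp", "fixed_interval": "1m"},
                "aggs": {"avg_cpu": {"avg": {"field": "system.cpu.total.norm.pct"}}},
            }
        },
    }


def run_query(es_url: str, body: dict) -> dict:
    """POST the query to Elasticsearch's _search endpoint and return the parsed response."""
    req = urllib.request.Request(
        f"{es_url}/metricbeat-*/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

For example, `run_query("http://localhost:9200", cpu_history_query("playout-01"))` would return one bucket per minute; a production cluster will usually also require credentials and HTTPS, which are omitted here.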

Air Metrics

"Can we get more?" you could ask. Well, yes, in fact you can! With Cinegy Air v21.2 we have added metrics which we believe are useful and can be queried and sent to Elasticsearch.

From these statistics, we can create graphs showing whether we have had any dropped output frames, along with any variations in system timing and any delays in accessing media.

We can then combine the information from Cinegy Air and Metricbeat to give us a complete picture of both the machine and the software.

This way, when we get an issue, we can correlate it with what was happening on the machine at that point in time, which is invaluable in an environment such as the cloud, where you do not control the underlying infrastructure.
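The correlation itself can be as simple as pulling the machine metrics recorded around each reported problem. A minimal sketch, assuming the events (for example dropped-frame reports) and the metric samples (for example CPU %) have already been fetched as plain Python values; the data shapes and example figures are hypothetical, not Cinegy Air's metric format:

```python
from bisect import bisect_left, bisect_right
from datetime import datetime, timedelta


def samples_near(events, samples, window=timedelta(seconds=30)):
    """For each event time, return the metric values recorded within +/- window.

    events:  list of datetimes, e.g. when output frames were dropped
    samples: list of (datetime, value) tuples sorted by time, e.g. CPU %
    """
    times = [t for t, _ in samples]
    result = {}
    for event in events:
        lo = bisect_left(times, event - window)
        hi = bisect_right(times, event + window)
        result[event] = [value for _, value in samples[lo:hi]]
    return result


# Hypothetical data: CPU sampled every 10 seconds, one dropped-frame report.
t0 = datetime(2021, 3, 25, 12, 0, 0)
cpu = [(t0 + timedelta(seconds=10 * i), v) for i, v in enumerate([35, 38, 41, 92, 95, 40])]
drops = [t0 + timedelta(seconds=35)]
print(samples_near(drops, cpu, window=timedelta(seconds=10)))  # values [92, 95] around the drop
```

Binary search keeps the lookup cheap even with long metric histories, as long as the samples are kept sorted by time.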

That was a brief take on the tools which we found to be invaluable in our time with the cloud.

Let’s finish off with some considerations.

Consideration

What are some things which you need to think about when you are using the cloud for broadcast?

If you are looking to achieve maximum density and therefore least cost per channel of playout, then you need to think about:

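Source media encoding. Try to settle on a fixed in-house format that is GPU friendly. You get the same GPU capabilities in a G4 instance type from the smallest xlarge, at say 84 cents per hour, through to the 16xlarge at over 8 dollars, so paying for a larger size mostly buys extra CPU. Keeping decoding on the GPU lets the smallest size carry more channels, which keeps the cost per channel down.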
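The arithmetic behind cost per channel is straightforward. A minimal sketch using the approximate prices above and the 4 HD channels measured on the smallest G4; treat the prices as illustrative, since they vary by region, operating system and over time:

```python
import math

HOURS_PER_MONTH = 730  # 8760 hours per year / 12


def per_channel_hourly(instance_price: float, channels: int) -> float:
    """Hourly instance cost shared across the channels it carries."""
    return instance_price / channels


def break_even_channels(instance_price: float, target_per_channel: float) -> int:
    """Fewest channels a pricier instance must carry to match a per-channel cost."""
    return math.ceil(instance_price / target_per_channel)


small = per_channel_hourly(0.84, 4)  # smallest G4, 4 HD channels as measured above
print(f"${small:.2f} per channel-hour, ${small * HOURS_PER_MONTH:.2f} per channel-month")
print("channels needed on an $8/hour instance to match:", break_even_channels(8.0, small))
```

With the same GPU in both sizes, the larger instance would need to carry around ten times as many channels just to break even, which is why GPU-friendly media and the smallest instance size go together.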

Graphics. Be efficient in how you build your templates. Don’t use full-sized raster plates to put a bug in the corner. Don’t use full raster videos to put an animated lower-third on.

Hybrid deployments. Don’t be afraid of keeping some things on-premises. This can be useful when you need to convert signals back to baseband, or when using our pseudo-interlaced mode in Cinegy Air 21.2: if you want to use one of the newer NVIDIA GPUs in the cloud, you will need to convert the signal back to interlaced once it reaches your delivery premises.

Split the load. You can use all our software in client/server mode which means you don’t need to squeeze playout engines and the operator software on the same instance. You could even run them on-premises if needed.

Be mindful of the uptime/availability statistics on cloud services. We have seen AWS FSx become unresponsive for 20 seconds whilst a network reconfiguration occurred. You need to plan for failure when you’re working with the cloud.
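To get a feel for the scale of a 20-second storage stall, compare it with the 50-frame RAM buffer from the test configuration. This is purely an illustration that assumes the buffer were the only cushion between storage and output; it is not a description of how Cinegy Air handles storage outages:

```python
import math


def seconds_covered(buffer_frames: int, fps: float) -> float:
    """How long a frame buffer can keep output running if its source stalls."""
    return buffer_frames / fps


def frames_needed(stall_seconds: float, fps: float) -> int:
    """Buffer depth required to ride out a stall of the given length."""
    return math.ceil(stall_seconds * fps)


# 1080i50 carries 25 frames per second; 1080p50 carries 50.
print(seconds_covered(50, 25), seconds_covered(50, 50))  # 2.0 1.0
print(frames_needed(20, 25), frames_needed(20, 50))      # 500 1000
```

A buffer covering 1 to 2 seconds is an order of magnitude short of a 20-second outage, which is why the answer is to design for failure rather than to rely on buffering alone.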

Unforeseen costs. Make sure you are tracking your cloud costs and know when you have to pay to get things into or out of the environment. If you are using remote operator machines on-premises, then delivering the confidence streams to them will cost money.
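Egress volume follows directly from stream bitrate and running time. A minimal sketch; the 10 Mbps confidence-stream bitrate, the number of streams and the $0.09 per GB price are placeholder assumptions, so substitute your own stream settings and your provider's current data transfer pricing:

```python
HOURS_PER_MONTH = 730  # 8760 hours per year / 12


def egress_gb(bitrate_mbps: float, hours: float) -> float:
    """Data volume in decimal gigabytes for a constant-bitrate stream."""
    bits = bitrate_mbps * 1_000_000 * hours * 3600
    return bits / 8 / 1_000_000_000


def monthly_egress_cost(bitrate_mbps: float, streams: int, price_per_gb: float,
                        hours: float = HOURS_PER_MONTH) -> float:
    """Cost of sending `streams` identical streams out of the cloud for `hours`."""
    return egress_gb(bitrate_mbps, hours) * streams * price_per_gb


print(f"{egress_gb(10, 24):.0f} GB per day for one 10 Mbps stream")  # 108 GB
print(f"${monthly_egress_cost(10, 4, 0.09):,.2f} per month for 4 streams at $0.09/GB")
```

Even a modest confidence stream running around the clock adds up to terabytes per month, so it is worth choosing its bitrate deliberately.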

So, just a couple of reminders to finish. If you need help when you are looking to make the leap, the Cinegy Professional Services team is here to help, and if you are now keen to get started, simply search for Cinegy or Channel in the Cloud on the cloud marketplaces.