What's New in Cinegy Multiviewer 22

Introduction

We were so keen to get our previous Cinegy Multiviewer release out that we had almost no time to write a traditional "What’s New…" post. Now, finally, we are back with the new and shiny Cinegy Multiviewer 22.10 release!

The previous release brought a fair number of bug fixes and improvements, including a fresh GUI look and support for cinematic frame rates and non-48 kHz audio feeds.

Some of the updates, like the significant GPU performance optimizations, were announced quite a while ago, and you may have seen them at Cinegy TechCon, on our YouTube channel, or in Cinegy Multiviewer 21.11 as an early beta feature.

Now, with the Cinegy Multiviewer 22.10 release, these changes are at their best, tuned for any use case in an official production release.

Of course, that is not all: since the last release we have moved to our own AC-3 decoder, added support for the latest Ampere series of NVIDIA cards, moved our product to NewTek NDI SDK v5.1.3, and added plenty of usability improvements.

Also, in v22.10 we have begun gradually renovating the Cinegy Multiviewer web-control, and you can already see the first basic results.

Some of these more complex additions, like this one or the inclusion of SRT telemetry and statistics, may go unnoticed without extra publicity, so summing them all up in one place was a must.

Before I go into the details, I’ll start with a bullet list of the main improvements.

Remarkable New Features

- Moving video alerts processing to GPU pipeline
- Implementing use of GPU for scaling streams on decoding
- Gathering SRT streams statistics and submitting them to telemetry systems
- Extending Cinegy Multiviewer web-control API to manage MV outputs and layouts
- Updating Cinegy Multiviewer’s NewTek NDI SDK to v5.1.3
- Supporting CUDA 11 and latest generation of NVIDIA Ampere cards
- Adding a modern high-quality AC-3 decoder
- Supporting cinematic 23.98 and 24 fps video formats
- Supporting non-48 kHz audio streams

Now I’d suggest you make yourself a hot drink, relax in your chair, and join me on an in-depth (and hopefully exciting) dive into the details. 😊

GPU Performance Amplification

If you have seen this challenge on our YouTube channel, feel free to scroll down to the next section.

Back when we were working on the first release of Cinegy Multiviewer 21.11, we challenged ourselves to build a high-density Cinegy Multiviewer server able to decode and display an impressive 100 Full HD feeds.

For this we used 4x NVIDIA Quadro RTX4000 cards in a 64-core AMD Threadripper workstation. We used our own Cinegy components to generate those 100 feeds and send them over a 10 GigE link to this "beast" of a machine.

What seemed odd was that, even with the stunning performance of 4 RTX cards, the CPU load during this experiment turned out to be suspiciously high, while the GPUs were clearly slacking off.

So, where could our resource utilization be improved?

We were confident about our NVENC and NVDEC workflow, so we ruled this out as the potential bottleneck and focused our attention on another component, key to Cinegy Multiviewer’s performance – scaling.

During the video frame processing required for scaling, the GPU would decode each frame into about 4 MB of uncompressed video data (for Full HD). This data then went through several copy cycles, from the GPU to RAM, then to the CPU, only to be passed back to the CUDA cores of the GPU’s main chip for processing.

The main idea behind the improvements was to reduce the number of these copy operations and to concentrate data processing in the Cinegy Multiviewer master GPU pipeline.
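To put those copies in perspective, here is a rough back-of-the-envelope calculation. It is only a sketch: it assumes 8-bit 4:2:2 sampling (2 bytes per pixel), which is what brings a 1920×1080 frame to roughly 4 MB, and a frame rate of 25 fps. Both values are assumptions chosen for illustration, not measurements from the Cinegy Multiviewer pipeline.

```python
# Back-of-the-envelope estimate of uncompressed video traffic per copy.
# Assumptions (not measured values): 8-bit 4:2:2 sampling, 25 frames/s.

WIDTH, HEIGHT = 1920, 1080      # Full HD
BYTES_PER_PIXEL = 2             # assumed 8-bit 4:2:2 (e.g. UYVY)
FPS = 25                        # assumed frame rate
STREAMS = 100                   # the 100-feed challenge


def frame_bytes(width: int, height: int, bytes_per_pixel: float) -> int:
    """Size of one uncompressed frame in bytes."""
    return int(width * height * bytes_per_pixel)


def copy_bandwidth_gb_per_s(streams: int, fps: int, frame_size: int) -> float:
    """Data moved per second by ONE copy of every frame, in GB/s (10^9 bytes)."""
    return streams * fps * frame_size / 1e9


size = frame_bytes(WIDTH, HEIGHT, BYTES_PER_PIXEL)
per_copy = copy_bandwidth_gb_per_s(STREAMS, FPS, size)

print(f"One Full HD frame: {size / 1e6:.2f} MB")                           # 4.15 MB
print(f"One copy of {STREAMS} streams at {FPS} fps: {per_copy:.2f} GB/s")  # 10.37 GB/s
```

Under these assumptions, every extra hop between GPU memory and system RAM moves on the order of 10 GB/s for 100 feeds, which is why removing copies matters more than speeding up any single one.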

In my tests I used the same software setup on three different test kits, with Cinegy Multiviewer 15 as a baseline. I connected it to our Grafana-based telemetry portal with the help of the MetricBeat app.

The idea of my tests was pretty simple: load my test Cinegy Multiviewer servers with IP feeds until they operate at hardware capacity using GPU decoding, take the measurements, repeat the tests with Cinegy Multiviewer 22, and compare the results.

TestKit #1 – The Regular

I chose a server with a 7-year-old Intel Xeon chip and an NVIDIA Quadro P2200 board – almost matching the specs from our system recommendations.

The P2200 card can handle up to 24 HD H.264 interlaced streams.

I fed these 24 streams to my Cinegy Multiviewer 15 as multicast RTP over a 10 GigE LAN. I set all the streams to be decoded by the GPU.

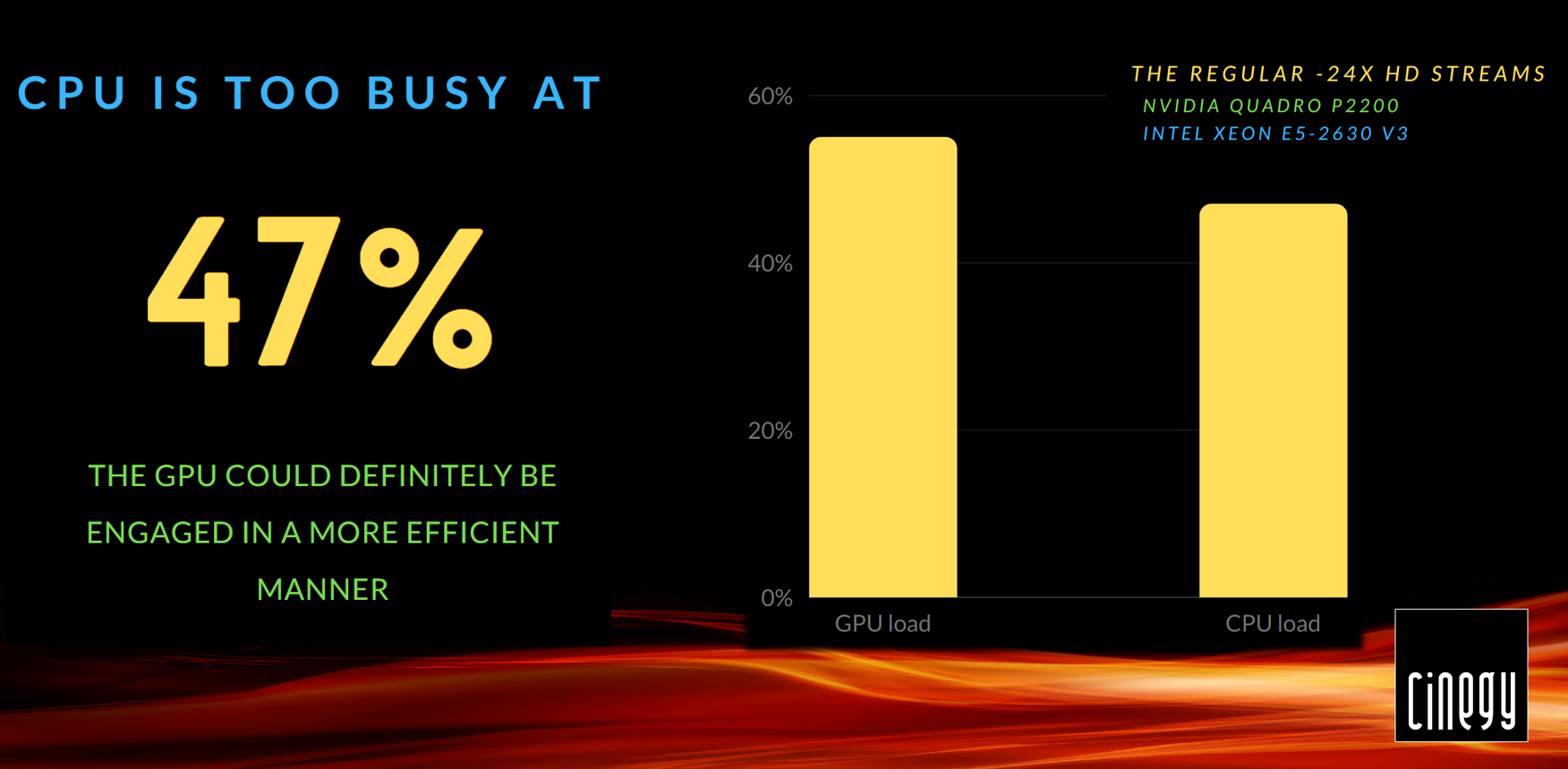

The CPU load in this test fluctuated between 45-50%, whilst the GPU was operating at 55% load, most of which is the video engine load attributed to NVDEC performance. I took a quick look at GPU-Z readings to see that the main chip doing scaling was barely engaged.

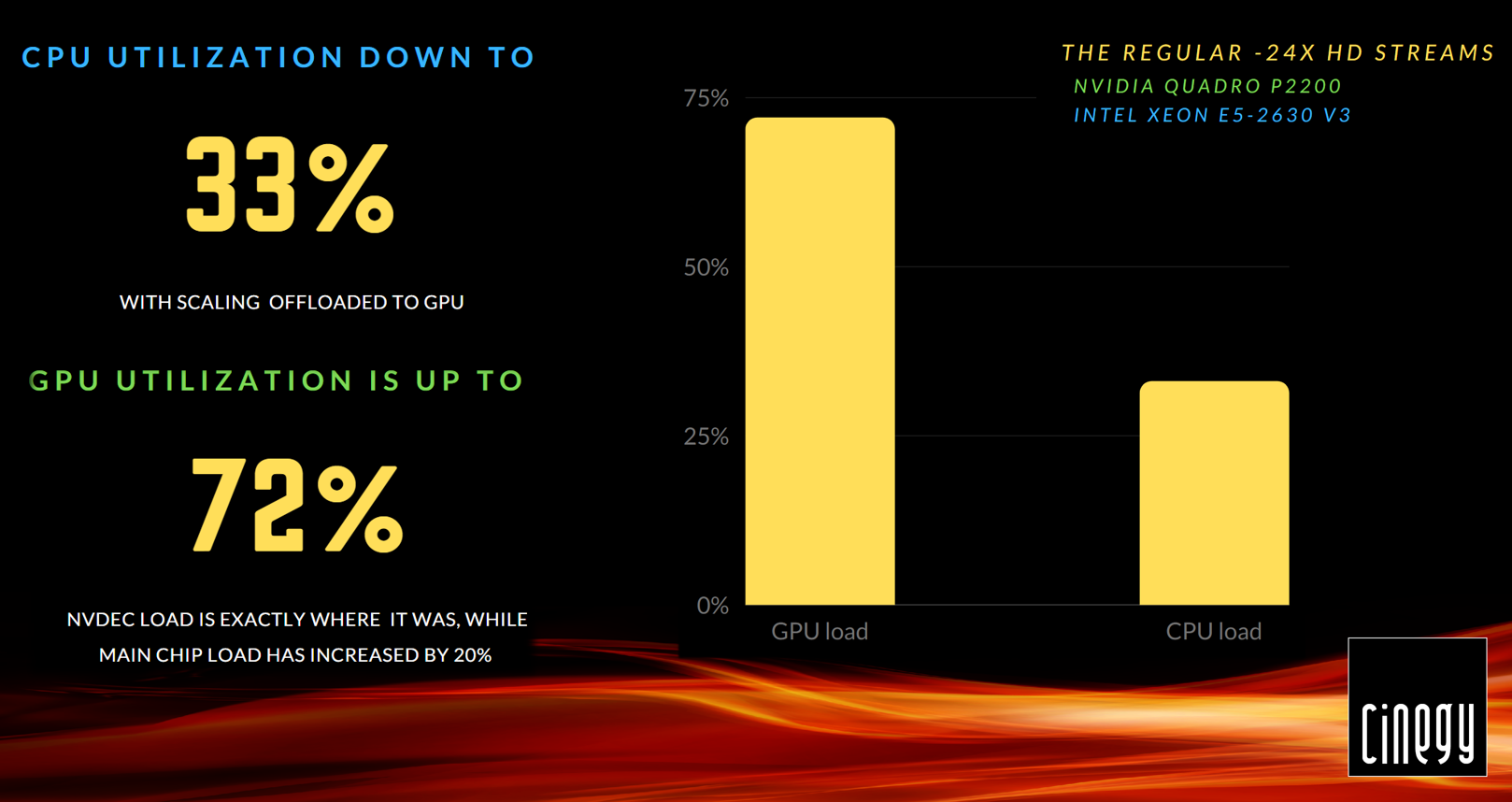

Next step – upgrading Cinegy Multiviewer to the development version 22, which includes our changes to scaling, and voilà – we see the CPU load significantly decreased.

The CPU is now running at 25-30%, while the GPU is now pretty busy at 75% load. Taking another look at the GPU-Z sensors, I saw that the general GPU load had increased, while the video engine load stayed pretty much where it was in Cinegy Multiviewer 15. The main chip’s engagement is clear evidence that our optimization is working!

That is roughly 20 percentage points less CPU load and an extra 20 points of GPU utilization: sounds good for starters!

However, these results look less impressive on bare-metal hardware: Cinegy Multiviewer 15 scaling simply doesn’t eat up as much CPU on this setup.

What’s going to happen if we try the same on virtual machines in the cloud?

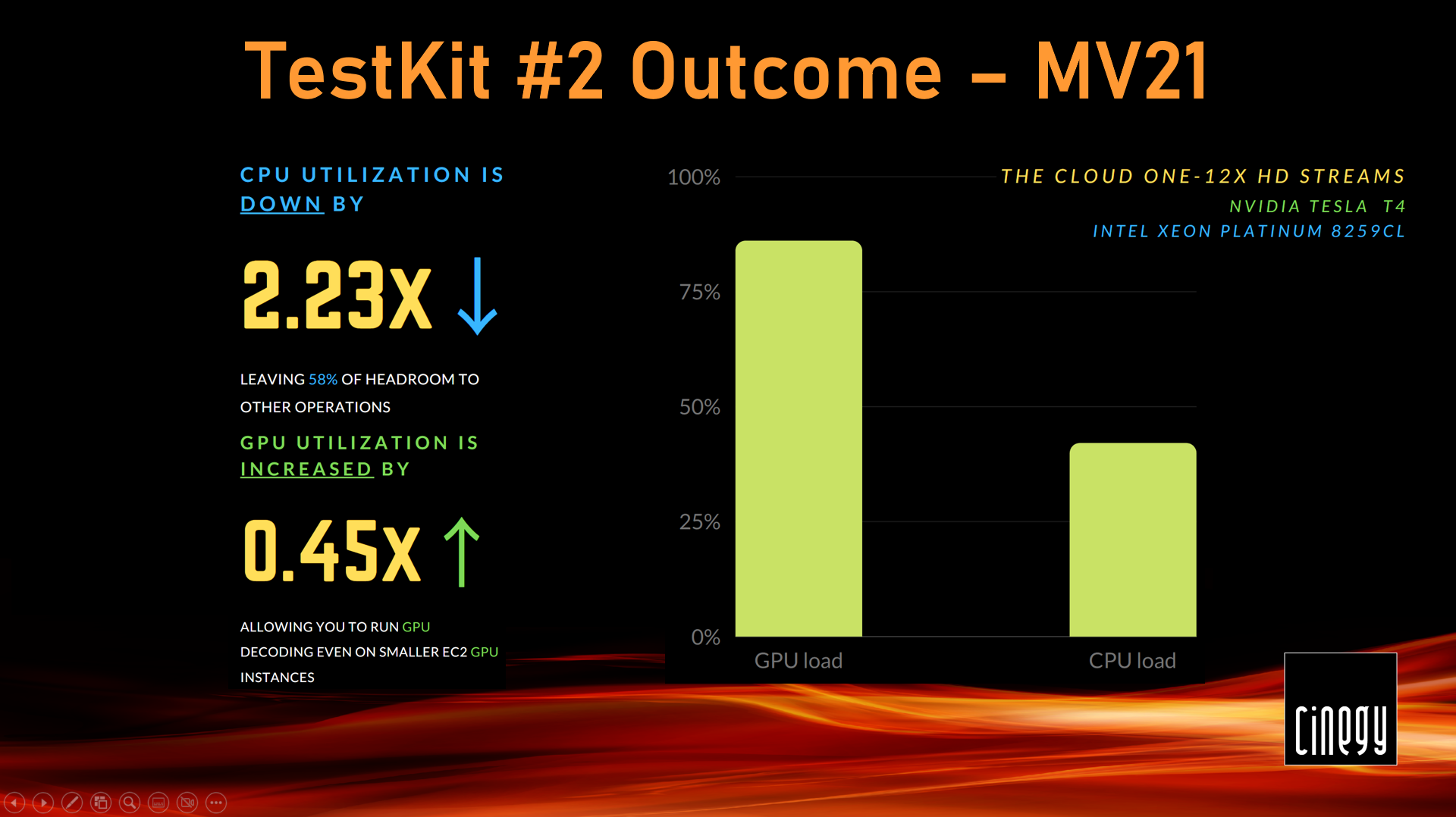

TestKit #2 – The Cloud-one

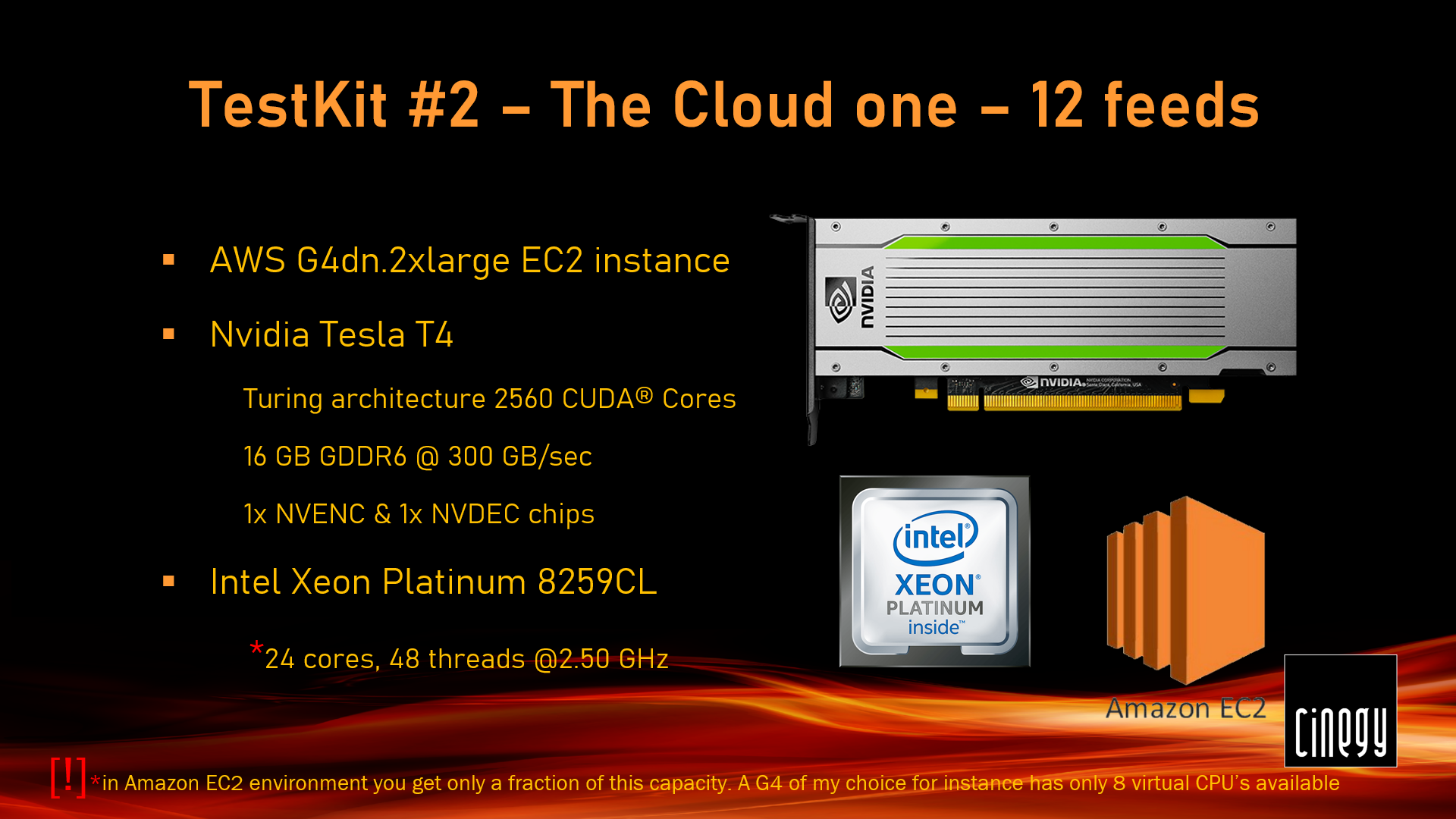

I chose an AWS G4 instance equipped with a Tesla T4 GPU and a 24-core Xeon Platinum CPU; these instances offer great performance at a relatively low price. Additionally, it literally takes a couple of minutes to get an EC2 instance ready for production.

Don’t believe me?! Check out our YouTube video from last summer, where we spent literally 10 minutes using Terraform automation scripts to launch 150 playout channels in the AWS environment, monitored by Cinegy Multiviewer 15.

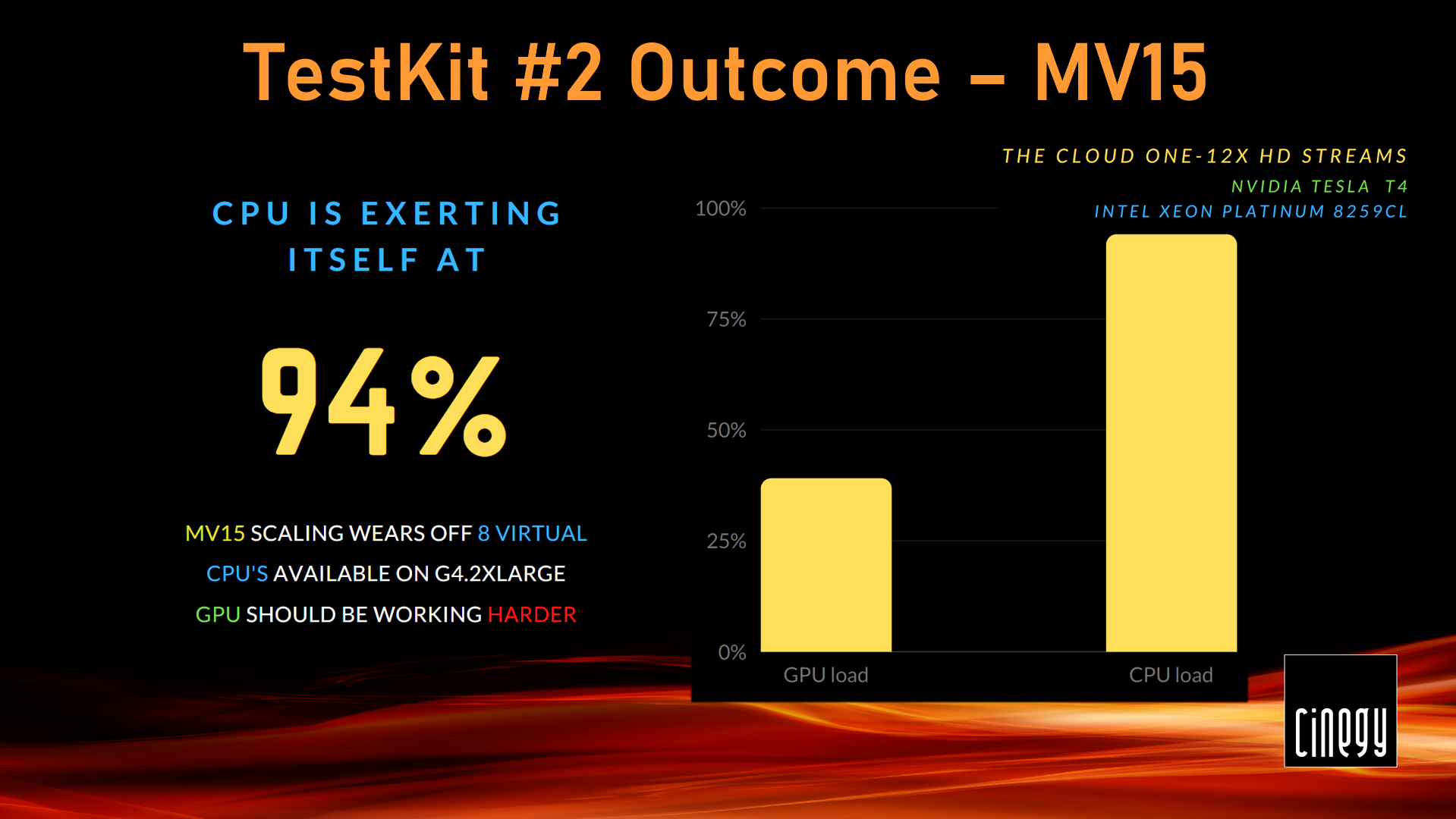

Similar to my previous test, I started with Cinegy Multiviewer 15 and fed it with 12 HD H.264 SRT streams delivered over the public Internet.

Cinegy Multiviewer 15 made that CPU work really hard at 94%, while my NVIDIA T4 was slacking off at 40% load. And even though handling SRT streams inevitably produces some CPU load, I’ve got to remind you that I set my Cinegy Multiviewer to use the GPU to decode each of those input streams!

An upgrade to Cinegy Multiviewer 22 introduced quite a dramatic shift in performance. See for yourself – CPU utilization went down to just 42%, while the GPU started working at 86%. It pretty much flipped the processing from the CPU to the GPU.

This EC2 testkit is a good indication of how an entry-level GPU instance can be a great and cost-effective choice for your cloud monitoring. Decoding all 12 streams on the CPU alone at a comfortable load would require at least a G4.4xlarge instance at £1.55/hour.

GPU-offloaded decoding for the same number of streams is already an option at roughly half that price with G4.2xlarge instances, and with the optimizations introduced, it leaves even more CPU headroom to carry out other operations. If you don’t care about headroom, you could potentially get away with a G4.xlarge at even lower rates.
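As a quick illustration of what that difference means over a month of round-the-clock monitoring, here is a small calculation. The 4xlarge rate is the one quoted above; the 2xlarge rate is simply assumed to be exactly half of it, so treat the result as a ballpark rather than an actual AWS quote.

```python
# Rough monthly cost of one always-on monitoring instance.
# The 2xlarge rate is an ASSUMPTION ("roughly half"), not a real AWS price.

HOURS_PER_MONTH = 24 * 30           # a 30-day month, running 24/7

RATE_4XLARGE = 1.55                 # GBP/hour, as quoted in the text
RATE_2XLARGE = RATE_4XLARGE / 2     # GBP/hour, assumed


def monthly_cost(rate_per_hour: float, hours: int = HOURS_PER_MONTH) -> float:
    """Cost in GBP of one instance running for the given number of hours."""
    return rate_per_hour * hours


cpu_only = monthly_cost(RATE_4XLARGE)
gpu_offloaded = monthly_cost(RATE_2XLARGE)

print(f"CPU-only decoding (4xlarge):      £{cpu_only:.0f}/month")
print(f"GPU-offloaded decoding (2xlarge): £{gpu_offloaded:.0f}/month")
print(f"Difference:                       £{cpu_only - gpu_offloaded:.0f}/month")
```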

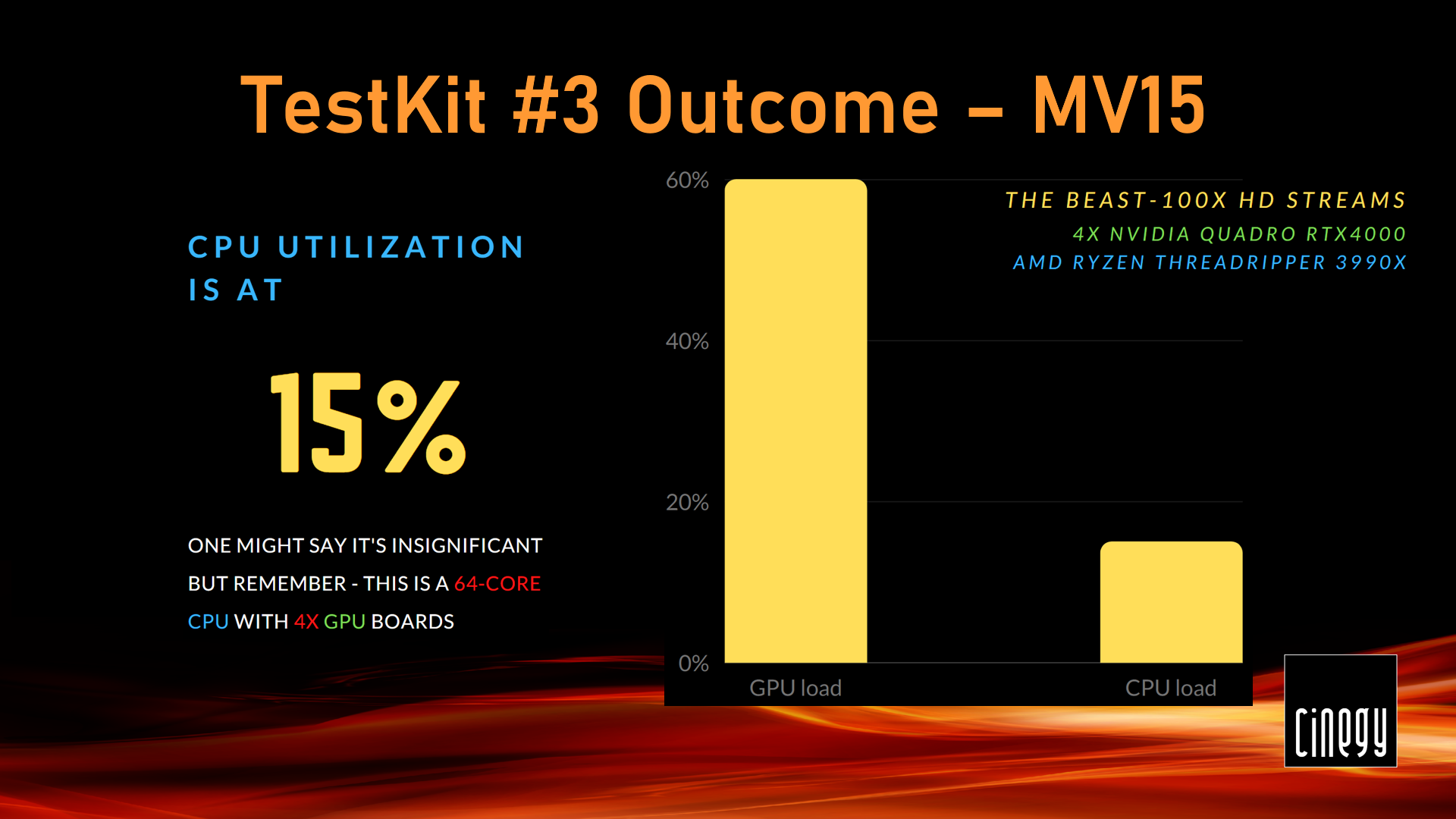

TestKit #3 – The Beast

Let’s return now to our challenge of monitoring 100 HD channels in a single box. Remember, I was using an amazing 64-core AMD Threadripper with 4 NVIDIA RTX cards. Unlike the good old P2200, which only has one NVDEC chip, each of these cards offers 2 NVDEC chips, almost twice as many CUDA cores and an extra 3 GB of memory, providing the level of density required to handle that many streams in parallel.

So, I streamed 100 5MB HD H.264 feeds to Cinegy Multiviewer 15, letting each of the cards decode 25 streams. The CPU load was at 15%. While this seems low, it is definitely too much for a 64-core Threadripper with GPU offloading enabled.

At the same time, the GPUs that were supposed to be busy with some heavy lifting were taking it easy, operating at 60%.

The tiles on the Cinegy Multiviewer mosaic did not look smooth either, and the quality could be improved.

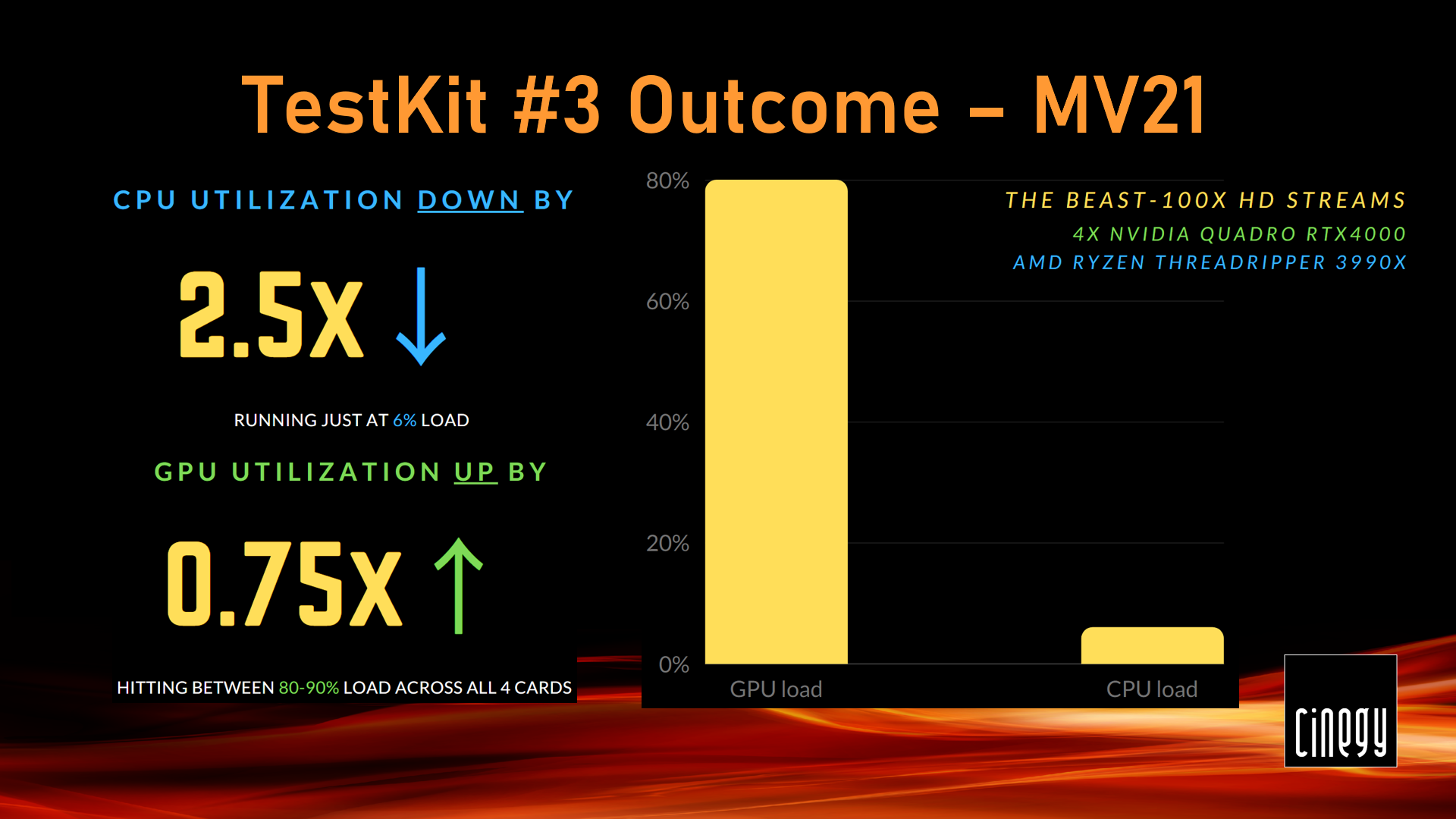

Let’s see whether we spot any improvement with the same setup running Cinegy Multiviewer v22.

The CPU load dropped to 6%, while the GPUs started working way harder at 80-90% each.

This resulted in Cinegy Multiviewer PiPs having pristine quality and no jitter whatsoever.

Challenge complete!
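To compare the three test kits side by side, the figures quoted above can be put through a few lines of arithmetic. Where a range was reported (for example 45-50%), the sketch below uses its midpoint; that is an assumption made purely to get one number per test, not an additional measurement.

```python
# Relative change in CPU and GPU load, using the figures quoted in the text.
# Midpoints stand in for reported ranges (an assumption for illustration).

results = {
    # name: (cpu_v15, cpu_v22, gpu_v15, gpu_v22), all in percent
    "TestKit #1 (P2200)": (47.5, 27.5, 55.0, 75.0),
    "TestKit #2 (AWS T4)": (94.0, 42.0, 40.0, 86.0),
    "TestKit #3 (4x RTX)": (15.0, 6.0, 60.0, 85.0),
}


def relative_drop(before: float, after: float) -> float:
    """Reduction relative to the starting value, in percent."""
    return (before - after) / before * 100.0


for name, (cpu15, cpu22, gpu15, gpu22) in results.items():
    print(
        f"{name}: CPU {cpu15:g}% -> {cpu22:g}% "
        f"({relative_drop(cpu15, cpu22):.0f}% less), "
        f"GPU {gpu15:g}% -> {gpu22:g}% (+{gpu22 - gpu15:g} points)"
    )
```

With these inputs, the relative CPU load falls by roughly 42%, 55% and 60% across the three kits, with the largest shift towards the GPU on the cloud instance.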

SRT Telemetry and Statistics

Cinegy Multiviewer is a product that pioneered the Cinegy telemetry portal integration and started our path to harnessing "big data" for the benefit of the broadcast industry.

Back in the days of Cinegy Multiviewer 12, we only had a notification plug-in that would collect and send Cinegy Multiviewer alerts data to a telemetry server, where it was formalized and converted into a neat graphic representation. This was great for starters, but there is always room for improvement!

Now, with Cinegy Multiviewer 22, it is possible to collect real-time SRT stream metrics straight from your Cinegy Multiviewer player and submit them to a telemetry server of your choice, where they can be presented in a clear and comprehensive graphical form.

This data is generated every second by each of the running Cinegy Multiviewer servers.

These metrics are exposed through Cinegy Multiviewer REST API methods, which let you pull the data whenever required.

Here are the metrics in their raw form accessed through a web browser pointed at:

We remain committed to letting customers leverage their existing investment in monitoring platforms. While we provide reference examples for integrating with our own choice (Elasticsearch with Grafana), our implementation should be easy to adapt to whatever metrics system you prefer.

However, to show you what this can look like, I’m going to focus on the standard Elasticsearch and Grafana combination we use internally.

Since we adopted SRT in Cinegy software a few years ago, each year we have seen more and more customers put their trust in this technology and use the public Internet for transmission.

However, relying on the Internet as the backbone of your transmission has always involved either an element of blind faith in your connection stability or a need for third-party SRT health analysis tools for extra peace of mind.

With Cinegy Multiviewer 22.10, you can now request statistics for any SRT input connections currently being decoded by a Cinegy Multiviewer server, grouped by connection. This can play a significant role in monitoring by distinguishing transmission-related issues from encoding/decoding artifacts.

It can also warn you ahead of time that it might be a good moment to switch over to a resilient circuit, before you actually hit an outage!
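As a minimal sketch of that kind of early warning, the snippet below flags a connection once its packet loss stays above a threshold for several consecutive one-second samples. The field names, the 2% threshold and the three-sample window are all hypothetical choices for illustration; they are not taken from the Cinegy Multiviewer API.

```python
from dataclasses import dataclass


@dataclass
class SrtSample:
    """One per-second snapshot of an SRT input (field names are hypothetical)."""
    packets_received: int   # cumulative packets received so far
    packets_lost: int       # cumulative packets reported lost so far


def loss_ratio(prev: SrtSample, curr: SrtSample) -> float:
    """Fraction of packets lost between two snapshots (0.0 if nothing arrived)."""
    received = curr.packets_received - prev.packets_received
    lost = curr.packets_lost - prev.packets_lost
    total = received + lost
    return lost / total if total > 0 else 0.0


def should_switch(samples: list[SrtSample], threshold: float = 0.02,
                  consecutive: int = 3) -> bool:
    """True once loss exceeds `threshold` for `consecutive` intervals in a row."""
    streak = 0
    for prev, curr in zip(samples, samples[1:]):
        streak = streak + 1 if loss_ratio(prev, curr) > threshold else 0
        if streak >= consecutive:
            return True
    return False


# Illustrative data: a clean link and one losing ~5% of packets every second.
healthy = [SrtSample(1000 * i, 0) for i in range(5)]
degrading = [SrtSample(1000 * i, 50 * i) for i in range(5)]

print(should_switch(healthy))    # False
print(should_switch(degrading))  # True
```

Requiring several bad intervals in a row is a deliberate choice here: a single lossy second on the public Internet is common and usually recoverable by SRT itself, whereas a sustained streak is a better hint that the circuit is degrading.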

An example of some SRT statistics in a dashboard is shown here:

The ability to push this data from Cinegy Multiviewer to telemetry automatically for each input is planned for the next generation of the product. In the meantime, we have published a reference data ingest script in PowerShell on the Cinegy GitHub repository here.

This script uses the aforementioned REST API method to pull the SRT input feed data from your Cinegy Multiviewer server. The data is then pre-processed into a structure that is easy for Elasticsearch to digest, so that it can serve as a data source for dashboarding in systems like Grafana.
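To illustrate the pre-processing step, here is a minimal Python sketch of the same idea. It is not the reference PowerShell script: it only shows how a list of per-connection statistics, already pulled from the server, could be reshaped into the newline-delimited JSON body that the Elasticsearch `_bulk` endpoint accepts. The index name, server name and statistic field names are hypothetical placeholders.

```python
import json
from datetime import datetime, timezone


def to_bulk_ndjson(connections, index, server, timestamp=None):
    """Turn per-connection stat dicts into an Elasticsearch _bulk request body.

    Each document gets a timestamp and the name of the server it came from,
    so dashboards can filter by time and by Multiviewer instance.
    """
    ts = (timestamp or datetime.now(timezone.utc)).isoformat()
    lines = []
    for stats in connections:
        # Action line, then the document itself, as the bulk format requires.
        lines.append(json.dumps({"index": {"_index": index}}))
        lines.append(json.dumps({"@timestamp": ts, "server": server, **stats}))
    # The bulk body is newline-delimited JSON and must end with a newline.
    return "\n".join(lines) + "\n" if lines else ""


# Hypothetical payload: the real field names come from the REST API response.
sample = [
    {"connection": "input-1", "rtt_ms": 35.2, "packets_lost": 3},
    {"connection": "input-2", "rtt_ms": 48.9, "packets_lost": 0},
]

body = to_bulk_ndjson(sample, index="mv-srt-stats", server="mv-01")
print(body, end="")
```

The resulting string would be sent with a `Content-Type: application/x-ndjson` header to the `_bulk` endpoint of your Elasticsearch cluster; batching all connections into one request keeps the per-second overhead low.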

This script in its current state is pretty much ready to be used in production immediately. Alternatively, you are welcome to use it as a basis for adapting any other log and data ingest pipelines you might already have.

Sounds like too much of a challenge? Don’t hesitate to delegate this to the Cinegy Professional Services team! We will be happy to get you on board quickly by assisting with installation and configuration or (even better!) by training your own staff in these open-source tools, so they can handle any custom scenario and get your project on the rails in the shortest time!

Cinegy Multiviewer Web-control API – now more flexible

Some of you may be familiar with the Cinegy Multiviewer web-control API giving you basic control over your Cinegy Multiviewer output feeds and layouts.

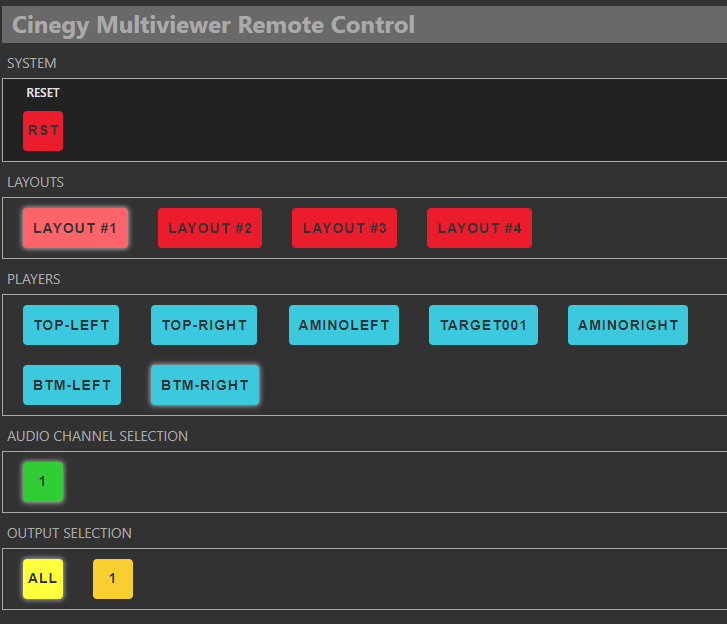

For those who are not, here is a sample of what it looks like with our default web page.

In the picture you can see a new "Output" section added at the bottom. Yeah, you guessed right! It now lets you select the target output on which you would like to switch layouts.

This effectively lets you juggle your Cinegy Multiviewer layouts in a multi-layout configuration in a manner that is now even more flexible, letting you target individual outputs without switching the layouts on any others.

Needless to say, the control interface we offer out of the box is only a source of basic inspiration for you to get creative. You can use it as a reference to integrate control into your existing control board, design a brand-new web interface, or wire your Cinegy Multiviewer to an external device like an Elgato Stream Deck – everything is possible with the Cinegy Multiviewer API.

Additionally, you can now use API methods to list, select and alter Cinegy Multiviewer player and layout names, as well as to set the active player or layout, making the abilities of a control app virtually limitless. Since Cinegy Multiviewer is quite frequently embedded in other systems and integrated by partners, this is something that will be very welcome.
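As a small sketch of how such a control app might be structured, the snippet below maps buttons of an external control surface to (output, layout) pairs. The actual API call is deliberately left as an injected function, because the real method names and parameters are in the API documentation and are not reproduced here; the button numbers, layout names and the `fake_switch` stand-in are all hypothetical.

```python
from typing import Callable, Dict, Tuple

# Button number -> (output number, layout name). Purely illustrative values.
BUTTON_MAP: Dict[int, Tuple[int, str]] = {
    0: (1, "News wall"),
    1: (1, "Sports wall"),
    2: (2, "Overview"),
}


def press(button: int, switch_layout: Callable[[int, str], None]) -> bool:
    """Trigger the layout switch bound to `button`; return False if unbound."""
    target = BUTTON_MAP.get(button)
    if target is None:
        return False
    output, layout = target
    switch_layout(output, layout)   # stand-in for the real web-control API call
    return True


# Stand-in for the API: it only records the active layout per output.
active_layouts: Dict[int, str] = {1: "Default", 2: "Default"}


def fake_switch(output: int, layout: str) -> None:
    active_layouts[output] = layout


press(0, fake_switch)
print(active_layouts)   # {1: 'News wall', 2: 'Default'}
```

Note that only output 1 changes while output 2 keeps its layout, mirroring the per-output targeting described above. In a real integration, `fake_switch` would be replaced by a function that issues the corresponding web-control API request.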

Want to get into the details of these API calls and many more? Check out the link to the Cinegy Multiviewer API documentation on Cinegy Open. Want to use our expertise in designing a custom solution based on these? Send an email to Cinegy Professional Services.